TL;DR: Why Databricks DLT Transforms Data Governance

- Automatic quality enforcement through built-in expectations catches bad data before it reaches production, preventing the $12.9M average annual loss from poor data quality (Gartner)

- 60-70% reduction in audit preparation time through automated lineage tracking across all transformations and data flows, satisfying SOX, HIPAA, and GDPR requirements

- Production-grade quarantine patterns preserve 100% of data for auditing while ensuring only validated records reach business users

- Zero-configuration lineage integrates with Unity Catalog for column-level traceability across workspaces

- 3x faster model deployment as organizations move from experimentation to production AI systems with governed pipelines

The Wake-Up Call Every Data Leader Faces

I remember the 3 AM phone call like it was yesterday. Our quarterly financial report had gone out with incorrect revenue figures, and the CEO wanted answers immediately. After hours of investigation, we traced the problem back to a data quality issue in one of our ETL pipelines; bad data had silently propagated through multiple transformations before landing in executive dashboards.

The cost? Beyond the embarrassment and emergency board meeting, we spent weeks manually auditing every data transformation, questioning every number, and rebuilding trust with stakeholders. According to Gartner research, organizations lose an average of $12.9 million annually to poor data quality, exactly the kind of problem we had just lived through.

That incident became my turning point. I realized that traditional approaches to data governance, treating it as an afterthought or a separate compliance exercise, were fundamentally broken. What we needed was governance baked into the fabric of our data infrastructure itself.

Quick Answer:

Databricks Delta Live Tables automates data governance by embedding quality checks, lineage tracking, and compliance controls directly into pipelines, reducing audit preparation time by 60-70% and stopping data incidents before they reach production.

What is Databricks Delta Live Tables for Data Governance?

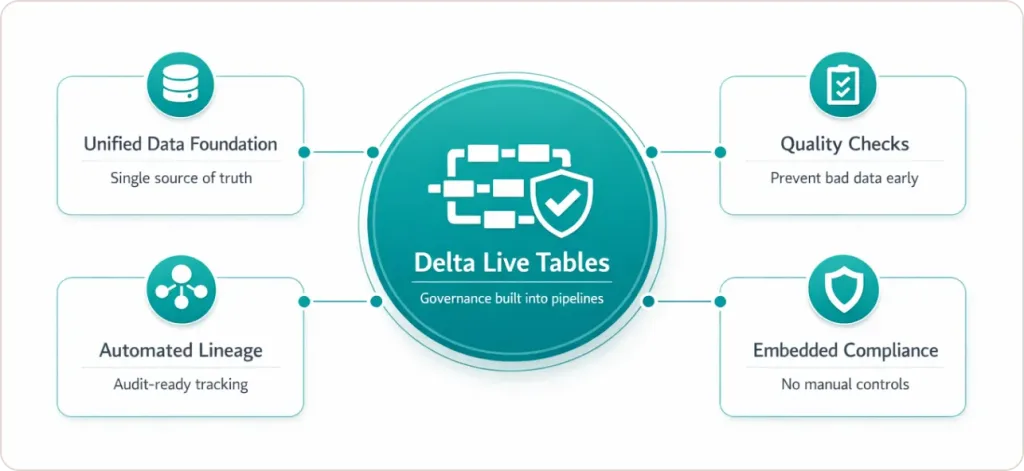

Databricks Delta Live Tables (DLT) is a declarative ETL framework that embeds data governance capabilities directly into pipeline code through automated lineage tracking, built-in quality expectations, and seamless Unity Catalog integration. Unlike traditional ETL tools that treat governance as an afterthought, Databricks DLT makes data quality and compliance checks part of the write transaction itself.

The $12.9 Million Problem: Why Traditional Governance Fails

According to Gartner research, poor data quality costs organizations an average of $12.9 million annually. Traditional approaches to data governance fail in four critical ways:

1. Invisible Data Lineage Creates Audit Nightmares

Traditional ETL pipelines offer zero native lineage tracking. When Virgin Australia Airlines needed to trace baggage data during audits, their team spent days manually documenting transformations. Without automated lineage, compliance teams face archaeological expeditions through code repositories.

Financial services firms under SOX 404 must prove data integrity for every transformation, while healthcare providers under HIPAA must demonstrate exactly where patient data flows. Without automated lineage, organizations face regulatory risk every quarter.

2. Reactive Quality Checks Let Bad Data Reach Production

Most teams implement quality validation after data is written to tables. Shell’s data engineering team experienced this when sensor data with negative temperature readings propagated through their IoT pipeline. By the time quality checks flagged the issue, three reports had generated invalid metrics, taking eight hours to diagnose and correct.

3. Manual Governance Processes Don’t Scale

As Databricks customers increased production ML models 11x year-over-year, manual governance collapsed. Data engineers at one healthcare provider spent 40% of their time updating lineage documentation and validating quality rather than building new analytics capabilities.

4. Fragmented Tools Create Security Gaps

Before Unity Catalog, users relied on external metastores and cloud IAM for access control. This fragmentation led to inconsistent permissions and security gaps. The Los Angeles County Auditor-Controller faced a patchwork of 41 different systems with no unified governance layer.

What Makes Databricks Delta Live Tables Different

Delta Live Tables fundamentally reimagines how we build and govern data pipelines. Instead of treating governance as a separate layer that you bolt on afterward, DLT embeds it directly into the pipeline definition itself.

Think of it this way: traditional ETL is like building a house and then hiring an inspector to check it after completion. DLT is like having building codes and quality checks embedded in the construction process itself, ensuring every step meets standards before the next one begins.

How Databricks Data Governance Solves These Challenges

Embedding governance in pipeline definitions produces measurable results. Virgin Australia Airlines illustrates the impact, achieving:

- 75% increase in real-time data availability

- 44% decrease in mishandled baggage incidents

- 25% revenue boost from analytics-driven insights

- Near-real-time baggage reconciliation replacing batch processes

The Three-Layer Governance Architecture

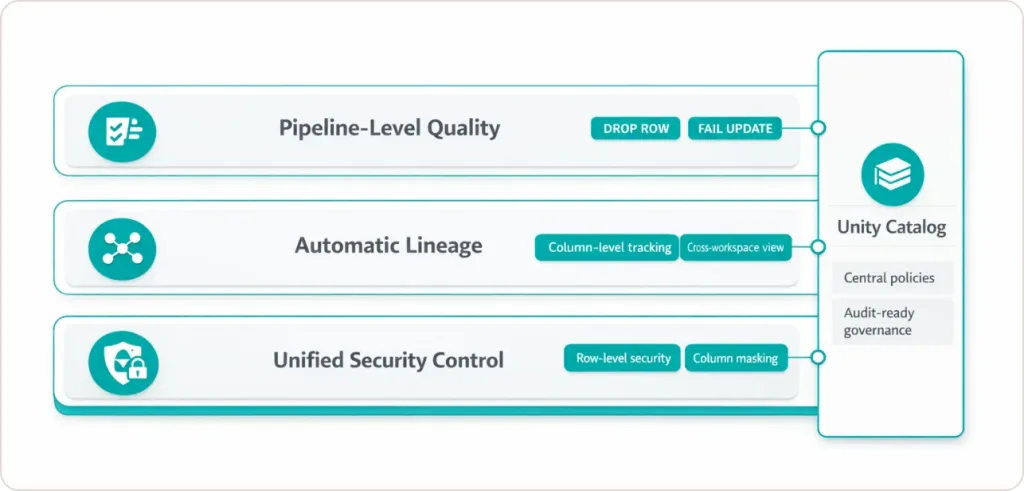

Layer 1: Pipeline-Level Quality Enforcement

Databricks DLT Expectations are declarative data quality rules that execute during write transactions, preventing bad data before it lands in production:

```sql
CREATE LIVE TABLE validated_transactions (
  -- Drop rows with a negative amount
  CONSTRAINT valid_amount EXPECT (transaction_amount >= 0) ON VIOLATION DROP ROW,
  -- Stop the update if a customer ID is missing
  CONSTRAINT valid_customer EXPECT (customer_id IS NOT NULL) ON VIOLATION FAIL UPDATE
)
AS SELECT * FROM raw_transactions
```

DLT supports three enforcement actions. The example above uses the first two:

- DROP ROW: Filters invalid records automatically

- FAIL UPDATE: Halts pipeline if critical fields are missing

- WARN: The default when no ON VIOLATION clause is given; logs violations without blocking data flow

During an implementation of the TPC-DI benchmark, Databricks DLT expectations caught a data generator bug that had existed for eight years without being caught by any other implementation.

Layer 2: Automatic Lineage as Code

Databricks Delta Live Tables generates lineage graphs automatically as you define transformations. Every table dependency and data flow is captured without additional instrumentation.
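To make the idea concrete, here is a minimal plain-Python sketch (not DLT code) of how declared table dependencies form a lineage graph that can be walked upstream. The table names and the `upstream_of` helper are hypothetical, chosen only for illustration; DLT derives the equivalent graph from the pipeline definition itself.

```python
from collections import deque

# Hypothetical pipeline: each table maps to the tables it reads from.
DEPENDENCIES = {
    "bronze_sales": [],
    "bronze_customers": [],
    "silver_sales": ["bronze_sales"],
    "silver_customers": ["bronze_customers"],
    "gold_revenue_by_region": ["silver_sales", "silver_customers"],
}

def upstream_of(table, dependencies):
    """Return every table that feeds `table`, directly or indirectly, sorted by name."""
    seen = set()
    queue = deque(dependencies.get(table, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        queue.extend(dependencies.get(current, []))
    return sorted(seen)

print(upstream_of("gold_revenue_by_region", DEPENDENCIES))
```

Asking for the upstream tables of a gold table is the question an auditor typically starts with: which sources contributed to this number?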

For Virgin Australia Airlines, auditors could visualize exactly how baggage data flowed from point-of-sale through bronze ingestion, silver validation, and gold reporting layers. What previously required three weeks of documentation review now took hours.

When integrated with Unity Catalog, data governance capabilities extend to:

- Column-level tracking showing which upstream fields contribute to each metric

- Cross-workspace visibility aggregating lineage across environments

- One-year retention providing historical audit trails

Understanding the differences between data lake, data warehouse, and data lakehouse architectures helps contextualize where DLT fits in modern data platforms.

Layer 3: Unified Security Control Plane

The breakthrough came when Databricks integrated DLT with Unity Catalog. Unity Catalog provides centralized access control, auditing, lineage, quality monitoring, and data discovery capabilities across Databricks workspaces. Now, every table produced by Databricks DLT automatically inherits centralized governance policies:

- Row-Level Security: Financial firms enforce that regional managers only see their geography’s transactions

- Column Masking: Healthcare providers redact patient identifiers for researchers

- Attribute-Based Access Control: Policies apply dynamically based on user roles

Organizations implementing data governance with Databricks report a 60-70% reduction in audit preparation time because lineage, access controls, and quality metrics are automatically maintained.

Core Data Governance Capabilities of Databricks DLT

1. Automated Data Lineage

Databricks DLT automatically generates directed acyclic graphs (DAGs) showing how data flows through transformations. When Ahold Delhaize USA needed to trace promotion effectiveness, DLT’s lineage graph showed exactly how sales data flowed from stores to executive dashboards.

For regulatory compliance:

- SOX 404: Financial transformations are fully documented

- GDPR: Personal data flows are traceable from collection to deletion

- HIPAA: Patient data movement is monitored at column-level granularity

2. Built-in Data Quality Controls

Delta Live Tables can prevent bad data from flowing through data pipelines using validation and integrity checks in declarative quality expectations. The three enforcement levels are:

| Action | Behavior | Use Case |
|---|---|---|
| WARN (default, no ON VIOLATION clause) | Records pass through, violations logged | Monitor data drift without blocking |
| DROP ROW | Invalid records filtered before write | Standard cleaning for non-critical fields |
| FAIL UPDATE | Pipeline halts immediately on violation | Critical rules where bad data is unacceptable |
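The difference between the three actions is easiest to see side by side. The following plain-Python sketch imitates their semantics on a list of dictionaries. It is an illustration only, not how DLT is implemented, and `apply_expectation` and `ExpectationFailed` are hypothetical names.

```python
class ExpectationFailed(Exception):
    """Raised to imitate a FAIL UPDATE violation."""

def apply_expectation(rows, name, predicate, action="warn"):
    """Imitate one expectation. Returns (rows_to_write, number_of_violations)."""
    failed = [row for row in rows if not predicate(row)]
    if action == "fail" and failed:
        # FAIL UPDATE: nothing is written when any row violates the rule
        raise ExpectationFailed(f"{name}: {len(failed)} violating row(s)")
    if action == "drop":
        # DROP ROW: only rows that satisfy the rule are written
        return [row for row in rows if predicate(row)], len(failed)
    # WARN: every row is written; violations are only counted
    return list(rows), len(failed)

rows = [{"revenue": 120}, {"revenue": -5}, {"revenue": 40}]
is_valid = lambda row: row["revenue"] > 0

kept, violations = apply_expectation(rows, "valid_revenue", is_valid, action="drop")
print(len(kept), violations)
```

Switching `action` to `"warn"` keeps all three rows while still reporting one violation, and `"fail"` raises instead of returning anything.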

Advanced Pattern: Production Quarantine Tables

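```python
import dlt
from pyspark.sql.functions import current_timestamp, lit

# Main pipeline with validation: rows failing the expectation are dropped
@dlt.table
@dlt.expect_or_drop("valid_revenue", "revenue > 0")
def silver_sales():
    return dlt.read("bronze_sales")

# Quarantine table with inverted logic: keeps the rows the expectation rejects
@dlt.table
def quarantine_sales():
    return (
        dlt.read("bronze_sales")
        # NULL revenue does not satisfy "revenue > 0" either, so capture it too
        .where("revenue IS NULL OR revenue <= 0")
        # withColumn needs a Column, so wrap the reason string in lit()
        .withColumn("quarantine_reason", lit("invalid_revenue"))
        .withColumn("quarantine_timestamp", current_timestamp())
    )
```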

This approach delivers 100% data preservation for audit trails, clean production tables with only validated records, and root cause analysis through quarantine metadata.
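When a table has several expectations, the quarantine filter can be derived from the same rule set so the two never drift apart. A minimal sketch, assuming rules are kept as a name-to-SQL-expression dictionary; `RULES` and `quarantine_predicate` are hypothetical names, and the resulting string would be passed to a `.where(...)` call like the one above.

```python
# Hypothetical rule set: expectation name -> SQL boolean expression
RULES = {
    "valid_revenue": "revenue > 0",
    "valid_customer": "customer_id IS NOT NULL",
}

def quarantine_predicate(rules):
    """Build a SQL filter that matches rows failing at least one rule.

    coalesce(..., false) treats a NULL result (for example NULL revenue)
    as a failure, so those rows are quarantined rather than lost.
    """
    if not rules:
        raise ValueError("at least one rule is required")
    combined = " AND ".join(f"({expr})" for expr in rules.values())
    return f"NOT coalesce({combined}, false)"

print(quarantine_predicate(RULES))
```

The same dictionary can also feed the validated table through `@dlt.expect_all_or_drop(RULES)`, which keeps both sides of the split defined in one place.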

3. Unity Catalog Integration

What does Databricks use to handle data governance and security? Unity Catalog offers a single place to administer data access policies that apply across all workspaces in a region, with standards-compliant security based on ANSI SQL.

Row-Level Security:

```sql
-- current_user_region() is a placeholder for your own lookup; it is not a built-in function
CREATE OR REPLACE FUNCTION filter_region(region STRING)
RETURN region = current_user_region();

ALTER TABLE silver_transactions SET ROW FILTER filter_region ON (region);
```

Column Masking for PII:

```sql
CREATE OR REPLACE FUNCTION mask_email(email STRING)
RETURN CASE
  WHEN is_member('admins') THEN email
  ELSE '***@' || substr(email, instr(email, '@') + 1)
END;

ALTER TABLE customers ALTER COLUMN email SET MASK mask_email;
```
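To check the masking logic before deploying it, the same rule can be mirrored in plain Python. This sketch is only a test aid, not something Unity Catalog runs; the `is_admin` flag stands in for the `is_member('admins')` check.

```python
def mask_email(email, is_admin):
    """Mirror of the SQL mask: admins see the address, others only the domain."""
    if is_admin or email is None:
        return email
    at = email.find("@")
    # Keep everything after the first '@'. When no '@' is present, find()
    # returns -1, so the slice starts at 0 and the whole string is kept,
    # matching a SQL position function that returns 0 in that case.
    domain = email[at + 1:]
    return "***@" + domain

print(mask_email("jane.doe@example.com", is_admin=False))
```

Running a handful of real-looking addresses through a mirror like this is a cheap way to catch off-by-one mistakes in the substring arithmetic.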

4. Cost-Optimized Compliance

In The Memory, a retail technology company, quantified Databricks DLT’s impact: platform automation saved one full-time equivalent in pipeline maintenance (approximately $150,000 annually). For a healthcare provider managing 500+ pipelines, DLT eliminated 40% of the engineering time previously spent on documentation and validation.

Databricks AI Governance: Extending to Machine Learning

As organizations deploy 11x more ML models year-over-year, Databricks AI governance extends beyond data pipelines. The framework addresses this with three pillars:

1. Model Lineage and Versioning

Every model in MLflow traces back to the Databricks DLT pipelines that produced training data. When fraud detection performance degrades, data scientists identify exactly which upstream quality issue caused the drift.

2. Feature Store Governance

Features computed in Databricks Delta Live Tables are automatically cataloged with training data lineage, computation logic, and version history.

3. AI Trust Metrics

According to Gartner, AI trust is the #1 technology trend for 2024, and Gartner predicts that by 2026, organizations operationalizing AI transparency will achieve 50% higher adoption rates. Databricks data governance unifies data and AI governance for end-to-end traceability.

Real Example: Banco Bci’s Transformation

Banco Bci built a modern platform using Databricks DLT and Unity Catalog, achieving real-time transaction enrichment for personalized banking, customized loan models with complete audit trails, and significant operational efficiency improvements. (Source)

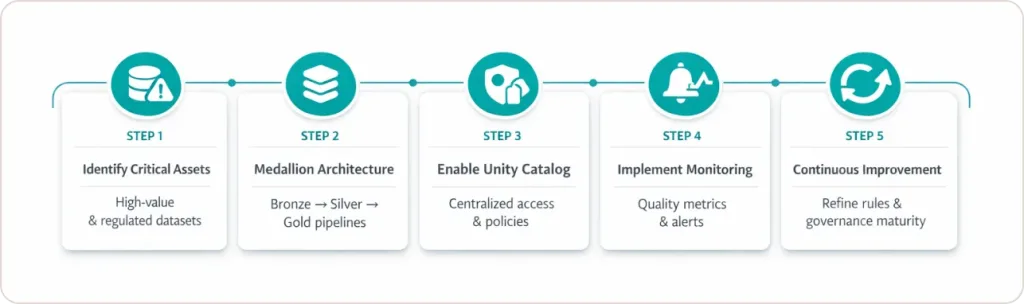

Implementation Roadmap: 5 Steps

Step 1: Identify Critical Assets

Start with datasets having the highest business value and regulatory risk: customer PII, financial transactions, healthcare patient records, and supply chain metrics. Define quality standards for each asset with specific validation rules.

Building a comprehensive data strategy roadmap helps align these technical requirements with business objectives.

Step 2: Build Medallion Architecture

Implement the Bronze-Silver-Gold pattern with Databricks Delta Live Tables:

- Bronze Layer: Raw data ingestion with source fidelity, preserving all data exactly as received

- Silver Layer: Validated, cleansed data, with Expectations applied for quality enforcement

- Gold Layer: Business-ready analytics tables with final business logic transformations

Step 3: Enable Unity Catalog

Configure Databricks data governance with access controls. When creating DLT pipelines, select Unity Catalog as the destination and configure security using governed tags:

```sql
-- Tag sensitive columns
ALTER TABLE customers ALTER COLUMN ssn SET TAGS ('pii' = 'high');

-- Create policy based on tags
CREATE FUNCTION mask_pii_high(value STRING)
RETURN CASE
  WHEN is_member('compliance_team') THEN value
  ELSE 'REDACTED'
END;
```
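With many sensitive columns, writing tag statements by hand gets error-prone. A minimal sketch that generates them from a mapping; the `SENSITIVE_COLUMNS` mapping and `tag_statements` helper are hypothetical, and the output follows the `SET TAGS` statement shown above.

```python
# Hypothetical classification: (table, column) -> PII sensitivity level
SENSITIVE_COLUMNS = {
    ("customers", "ssn"): "high",
    ("customers", "email"): "medium",
}

def tag_statements(columns):
    """Return one ALTER TABLE ... SET TAGS statement per classified column."""
    statements = []
    for (table, column), level in sorted(columns.items()):
        statements.append(
            f"ALTER TABLE {table} ALTER COLUMN {column} SET TAGS ('pii' = '{level}');"
        )
    return statements

for statement in tag_statements(SENSITIVE_COLUMNS):
    print(statement)
```

The helper does no escaping, so it is only suitable for identifiers that come from a reviewed configuration file, never from user input.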

Step 4: Implement Monitoring

Query Databricks DLT event logs for quality metrics:

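```sql
-- Replace <pipeline-id> with your pipeline's ID.
-- Each entry in expectations carries name, dataset, passed_records, and failed_records.
SELECT
  timestamp,
  details:flow_progress.data_quality.expectations AS expectations
FROM event_log('<pipeline-id>')
WHERE event_type = 'flow_progress'
  AND details:flow_progress.data_quality.expectations IS NOT NULL
```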

Set up alerts for expectation failures, pipeline errors, and schema drift. Our data visualization services make governance insights accessible to business stakeholders.
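An alert on expectation failures can be as simple as a threshold on the failure rate. The sketch below assumes each event's `details` field is a JSON string with expectation results under `flow_progress.data_quality.expectations` (fields `name`, `dataset`, `passed_records`, `failed_records`); verify that shape against your own event log. The sample event, the `failure_rates` helper, and the 5% threshold are illustrative.

```python
import json
from collections import defaultdict

# Illustrative event payload; the shape is an assumption to verify against your event log
SAMPLE_DETAILS = json.dumps({
    "flow_progress": {
        "data_quality": {
            "expectations": [
                {"name": "valid_revenue", "dataset": "silver_sales",
                 "passed_records": 90, "failed_records": 10},
            ]
        }
    }
})

def failure_rates(details_strings):
    """Aggregate pass/fail counts per (dataset, expectation) and return failure rates."""
    totals = defaultdict(lambda: [0, 0])  # key -> [passed, failed]
    for raw in details_strings:
        details = json.loads(raw)
        quality = (details.get("flow_progress") or {}).get("data_quality") or {}
        for item in quality.get("expectations", []):
            key = (item["dataset"], item["name"])
            totals[key][0] += item["passed_records"]
            totals[key][1] += item["failed_records"]
    return {
        key: failed / (passed + failed)
        for key, (passed, failed) in totals.items()
        if passed + failed > 0
    }

THRESHOLD = 0.05  # illustrative: alert when more than 5% of records fail
for key, rate in failure_rates([SAMPLE_DETAILS]).items():
    if rate > THRESHOLD:
        print(f"ALERT {key[0]}.{key[1]}: {rate:.1%} of records failed")
```

In practice the `print` call would be replaced by whatever notification channel the team already uses.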

Step 5: Continuous Improvement

Schedule weekly reviews of quarantine tables to analyze patterns in rejected data, expectation metrics to refine thresholds, and lineage usage to identify most-traced data flows. Banco Bci conducted quarterly governance maturity assessments, measuring audit preparation time reduction and user satisfaction.
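A weekly quarantine review usually starts with a count of rejection reasons. A minimal sketch over rows already fetched from a quarantine table; the sample rows are made up, and `quarantine_reason` matches the column added in the quarantine example earlier.

```python
from collections import Counter

# Made-up sample of rows read from a quarantine table
quarantined = [
    {"order_id": 1, "quarantine_reason": "invalid_revenue"},
    {"order_id": 2, "quarantine_reason": "missing_customer"},
    {"order_id": 3, "quarantine_reason": "invalid_revenue"},
]

def top_reasons(rows, limit=3):
    """Return the most frequent quarantine reasons as (reason, count) pairs."""
    counts = Counter(row["quarantine_reason"] for row in rows)
    return counts.most_common(limit)

print(top_reasons(quarantined))
```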

Real-World Success Stories

Virgin Australia Airlines: Operational Excellence

Solution: Migrated to Databricks with DLT, implementing near-real-time baggage reconciliation and Unity Catalog governance. (Source)

Results:

- 75% increase in real-time data availability

- 44% decrease in mishandled baggage

- Automated lineage enabled root cause analysis in minutes vs. hours

Los Angeles County: Public Sector Transformation

Challenge: Fragmented data across 41 departments prevented efficient management.

Solution: Implemented Databricks Delta Live Tables for centralized repositories and robust visualizations.

Results: Real-time annual statistics reporting, comprehensive budget reporting automation, and unified security policies replacing 41 systems. (Source)

Strategic Considerations

When Databricks DLT is Right

Ideal Scenarios:

- Building new platforms requiring embedded governance

- Modernizing legacy ETL with governance gaps

- Scaling to enterprise AI/ML programs

- Operating in highly regulated industries (finance, healthcare, government)

Decision Framework

Ask: “What’s more expensive – compute costs or engineer time?”

For most enterprises, Databricks data governance automation justifies compute costs through savings of at least one FTE in operational overhead, a 60-70% reduction in audit preparation time, 3x faster model deployment, and avoidance of the $12.9M average annual cost of poor data quality.
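The trade-off can be put into rough numbers. This back-of-the-envelope sketch uses the roughly $150,000 per FTE figure cited earlier; the added compute cost is a made-up input you would replace with your own estimate.

```python
def net_annual_benefit(fte_saved, cost_per_fte, added_compute_cost):
    """Engineer time saved minus extra compute spend, in dollars per year."""
    return fte_saved * cost_per_fte - added_compute_cost

# $150,000 per FTE comes from the example earlier in this article;
# the $60,000 compute figure is purely hypothetical.
benefit = net_annual_benefit(fte_saved=1, cost_per_fte=150_000, added_compute_cost=60_000)
print(f"Net annual benefit: ${benefit:,}")
```

A negative result means compute is the more expensive side of the question for that workload.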

If you’re evaluating modern data governance approaches, our team has helped organizations across industries implement governed pipeline architectures. Let’s discuss your specific requirements and explore whether DLT fits your governance maturity journey.

Conclusion: The Future is Automated

Data governance has reached an inflection point. Organizations deploying 11x more ML models year-over-year cannot scale governance through manual documentation. Databricks Delta Live Tables represents the paradigm shift toward proactive, automated governance.

The evidence is clear: a 75% increase in real-time data availability for Virgin Australia, a 60-70% reduction in audit preparation time across enterprises, and savings of one or more FTEs in pipeline maintenance annually.

Your Next Steps

Evaluate your current governance maturity with these questions:

- Can you trace production metrics to source data in under 10 minutes?

- Do quality checks run before or after data writes to production?

- What percentage of an engineer’s time goes to maintenance versus building capabilities?

If these questions reveal gaps, you’re not alone, but you can’t afford to wait. Every day with ungoverned pipelines accumulates technical debt and regulatory risk.