In 2023, a Minnesota hospital system was hemorrhaging $4.2 million annually on preventable readmissions. Within 18 months of implementing predictive analytics, they cut that figure by half. This isn’t an outlier. It’s the new standard at health systems that have moved beyond reactive care models.

This guide breaks down how predictive analytics works in clinical settings, which implementations succeed (and which fail spectacularly), and what it actually costs to deploy these systems at scale.

What you’ll find here:

- The four-stage technical pipeline from raw EHR data to clinical action

- Real cost breakdowns: why Allina Health spent $890K but saved $4.2M

- A major implementation failure, what went wrong, and three challenges that kill projects

- Platform comparison: Epic Cognitive Computing vs. Health Catalyst vs. IBM Watson Health vs. Jvion

- Legal liability questions when algorithms get predictions wrong

What Predictive Analytics in Healthcare Actually Means

Predictive analytics in healthcare uses machine learning algorithms to analyze historical patient data and forecast future health events before they occur. Unlike traditional healthcare analytics that explains what happened after the fact, predictive modeling in healthcare identifies high-risk patients hours or days before clinical deterioration.

The technology isn’t new. What’s changed is data availability. With 95% of U.S. hospitals now using electronic health records, there’s finally enough structured data to train accurate models.

Here’s what makes predictive healthcare different from the descriptive analytics most hospitals already use:

| Predictive Analytics | Traditional Analytics |
|---|---|
| Forward-looking: prevents problems | Backward-looking: explains problems |
| Real-time risk scoring | Quarterly performance reports |
| Integrated into clinical workflows | Reviewed in leadership meetings |
| Requires ML/AI expertise | Standard BI analysts can handle |
| ROI measured in outcomes | ROI measured in efficiency |

The distinction matters because implementation requirements differ dramatically.

The Technical Pipeline: From Messy Data to Clinical Decisions

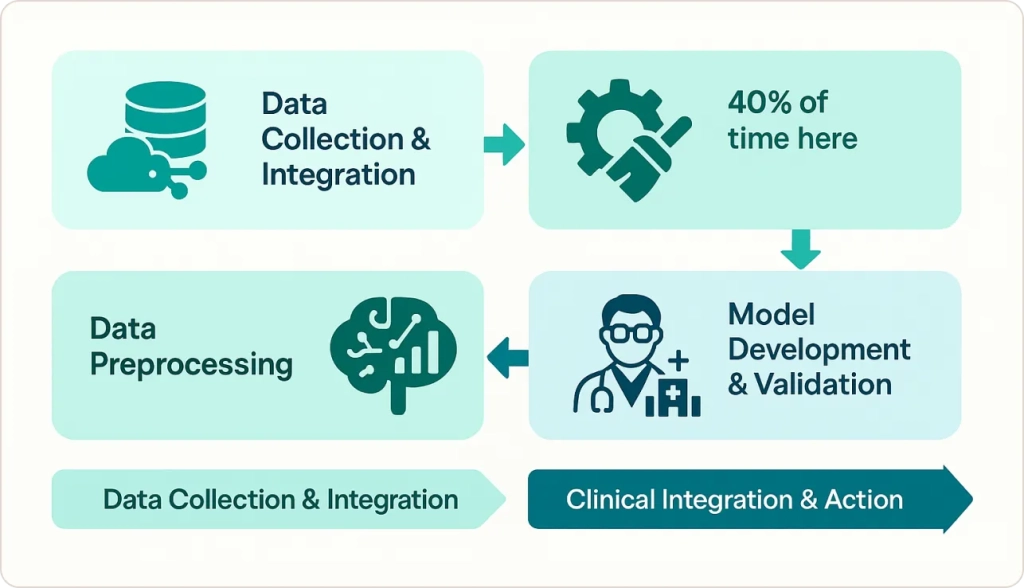

Every successful predictive analytics implementation in healthcare follows four stages. Skip any stage or underestimate its complexity, and your project will stall. Here’s what actually happens:

Stage 1: Data Collection and Integration

Hospitals pull data from 5-15 different systems: EHR platforms, laboratory information systems, pharmacy databases, claims data, and increasingly, remote monitoring devices.

The challenge isn’t accessing data; it’s that these systems weren’t designed to talk to each other. At one 300-bed community hospital, we discovered patient names spelled 73 different ways in their Epic EHR. Building a name-matching algorithm consumed six weeks before any actual analytics could begin.
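A name-matching step like the one described above might look roughly like the sketch below. It is a minimal illustration, not the algorithm that hospital used: the record fields (`name`, `dob`), the 0.85 similarity threshold, and the rule that birth dates must agree are all assumptions made for the example.

```python
import unicodedata
from difflib import SequenceMatcher


def normalize_name(raw: str) -> str:
    """Lowercase, strip accents and punctuation, and put tokens in a fixed order."""
    decomposed = unicodedata.normalize("NFKD", raw)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Punctuation becomes whitespace so "Smith,John" still splits into two tokens.
    cleaned = "".join(ch if ch.isalnum() else " " for ch in no_accents.lower())
    # Sorting tokens makes "Smith, John" and "John Smith" compare as equal.
    return " ".join(sorted(cleaned.split()))


def same_patient(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Candidate match: identical birth date and sufficiently similar names.

    The threshold is a hypothetical starting point, not a validated value.
    """
    if a["dob"] != b["dob"]:
        return False
    similarity = SequenceMatcher(
        None, normalize_name(a["name"]), normalize_name(b["name"])
    ).ratio()
    return similarity >= threshold


epic_record = {"name": "Smith, John", "dob": "1950-03-14"}
lab_record = {"name": "JOHN SMITH", "dob": "1950-03-14"}
print(same_patient(epic_record, lab_record))
```

In practice, candidate matches from a rule like this would still go to a human reviewer, since a false merge of two patients is far more dangerous than a missed one.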

Stage 2: Data Preprocessing (Budget 40% of Project Time Here)

Healthcare data is uniquely messy: missing values, duplicate records, inconsistent coding, and free-text clinical notes that resist structured analysis. In 12+ health system implementations, preprocessing consistently consumed 40% of total project timelines.

One example: ICU vital signs recorded every 15 minutes generate 96 data points daily per patient. But nurses often document retrospectively, creating timestamps that don’t reflect actual measurement times. Cleaning temporal data alone can take months.
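One way to surface the retrospective-documentation problem is to compare the time a reading claims to describe with the time it was actually entered. The sketch below assumes each reading carries both timestamps under the hypothetical field names `charted_at` and `entered_at`; the 30-minute lag tolerance is also an assumption, and many source systems will not expose both fields this cleanly.

```python
from datetime import datetime, timedelta

EXPECTED_PER_DAY = 24 * 60 // 15  # one reading every 15 minutes gives 96 per day


def flag_retrospective(readings, max_lag=timedelta(minutes=30)):
    """Return readings entered more than max_lag after the time they describe."""
    return [r for r in readings if r["entered_at"] - r["charted_at"] > max_lag]


def completeness(readings, days=1):
    """Fraction of the expected 15-minute readings that are actually present."""
    return len(readings) / (EXPECTED_PER_DAY * days)


readings = [
    {"charted_at": datetime(2024, 1, 1, 8, 0), "entered_at": datetime(2024, 1, 1, 8, 5)},
    # Charted for 08:15 but typed in at 11:00: likely documented after the fact.
    {"charted_at": datetime(2024, 1, 1, 8, 15), "entered_at": datetime(2024, 1, 1, 11, 0)},
]
suspect = flag_retrospective(readings)
print(len(suspect), "of", len(readings), "readings look retrospective")
```

Flagged readings are not necessarily wrong, but a model that treats their timestamps as exact will learn from a timeline that never happened.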

Stage 3: Model Development and Validation

This is where data scientists apply machine learning algorithms to identify predictive patterns. Most hospitals start with straightforward logistic regression models for readmission risk because they’re explainable to clinicians. More complex neural networks come later, once trust is established.

Model validation requires testing on patient populations the algorithm hasn’t seen before. A model that works brilliantly on academic medical center patients may fail completely at community hospitals with different demographic profiles.
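To show why logistic regression is easy to explain to clinicians, and what testing on unseen patients can look like, here is a small standard-library sketch. The feature names, coefficients, and intercept are invented for illustration; a real model would be fit to local data. The `auc` function measures how often a patient who was readmitted received a higher score than one who was not, and should be run on a cohort the model never saw.

```python
import math

# Hypothetical coefficients for illustration only, not a fitted model.
INTERCEPT = -3.0
COEFFICIENTS = {
    "prior_admissions_12mo": 0.45,
    "age_over_75": 0.60,
    "lives_alone": 0.35,
}


def readmission_risk(patient: dict) -> float:
    """Logistic model: sigmoid of intercept plus weighted features."""
    log_odds = INTERCEPT + sum(
        coef * patient.get(name, 0) for name, coef in COEFFICIENTS.items()
    )
    return 1.0 / (1.0 + math.exp(-log_odds))


def explain(patient: dict) -> list:
    """Per-feature contribution to the log-odds, largest first."""
    contributions = {
        name: coef * patient.get(name, 0) for name, coef in COEFFICIENTS.items()
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


def auc(scores, labels) -> float:
    """Probability that a random positive outranks a random negative (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative outcome")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


patient = {"prior_admissions_12mo": 3, "age_over_75": 1}
print(round(readmission_risk(patient), 3), explain(patient)[0][0])
```

Computing `auc` separately for the development site and for an external site is one simple way to catch the academic-center-to-community-hospital drop described above before go-live.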

Stage 4: Clinical Integration and Action

The model generates a risk score. Now what? If that score sits in a separate dashboard that clinicians never check, you’ve built expensive vaporware.

Successful implementations embed predictions directly into EHR workflows. A high sepsis risk score triggers an automated alert to the rapid response team. A readmission risk flag generates a care coordinator referral before discharge.

This workflow integration is where most projects fail, not the analytics.
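The routing logic behind that kind of integration can be very small. The sketch below maps a model name and risk score to a workflow action; the thresholds and action names are hypothetical, and in a real deployment the action would be a call into the EHR’s alerting or referral mechanism rather than a returned string.

```python
from typing import Optional

# Hypothetical thresholds and action names; each site would set its own.
ACTIONS = {
    "sepsis": (0.80, "page_rapid_response_team"),
    "readmission": (0.60, "refer_to_care_coordinator"),
}


def route_prediction(model: str, risk: float) -> Optional[str]:
    """Return the workflow action for a score, or None if it is below threshold."""
    threshold, action = ACTIONS[model]
    return action if risk >= threshold else None


print(route_prediction("sepsis", 0.91))
print(route_prediction("readmission", 0.30))
```

Keeping the threshold next to the action makes the trade-off visible: lowering a threshold is a decision to send more work to a specific team, not just a modeling tweak.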

Real-World Results: What Actually Works

Success Story: Allina Health’s Readmission Reduction

Allina Health in Minnesota implemented predictive risk scoring across their 12-hospital system in 2018. The technical approach combined 47 variables (prior admissions, medication adherence, social determinants) into a single readmission risk score calculated at discharge.

Investment: $890,000 (software, implementation, training)

Results: 10.3% reduction in 30-day readmissions

Annual savings: $4.2 million in avoided penalties and costs

ROI: 472% over three years
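The source does not say how the 472% figure was derived. One calculation that reproduces it from the two dollar figures above is annual savings divided by the up-front investment; how the three-year window and any ongoing costs enter is not stated, so treat the sketch below as arithmetic on the published numbers rather than Allina’s method.

```python
INVESTMENT = 890_000
ANNUAL_SAVINGS = 4_200_000


def gross_return_pct(savings: float, investment: float) -> float:
    """Savings as a percentage of the amount invested."""
    return savings / investment * 100


def net_roi_pct(savings: float, investment: float) -> float:
    """Conventional ROI: savings minus investment, as a percentage of investment."""
    return (savings - investment) / investment * 100


print(round(gross_return_pct(ANNUAL_SAVINGS, INVESTMENT)))  # 472
print(round(net_roi_pct(ANNUAL_SAVINGS, INVESTMENT)))       # 372
```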

What made this work? As their Senior Clinical Data Analyst Amirav Davy notes: “Predictive analytics are on the cutting edge of identifying patients at risk for a hospital readmission. It’s important to keep in mind, though, that assigning risk to patients in this innovative way won’t be effective unless we use it in a practical manner to redesign care processes.”

The key phrase: “redesign care processes.” They didn’t just add a risk score; they built entirely new discharge workflows around it.

Success Story: Kaiser Permanente’s System-Wide Deployment

Kaiser Permanente achieved a 12% reduction in readmissions by implementing AI-driven predictive analytics across their 39 hospitals. Their approach focused on the highest-risk patients: those with heart failure, COPD, and pneumonia.

The differentiator: They integrated social determinants of health (food insecurity, housing stability, transportation access) into their models. Traditional clinical variables alone predicted readmission with 68% accuracy. Adding social factors pushed accuracy to 84%.

Failure Story: When Algorithms Meet Reality

Not every implementation succeeds. A large Midwest health system spent $2.3 million on a sepsis prediction platform that clinicians abandoned within six months.

What went wrong:

- 40% false positive rate generated alert fatigue

- Predictions appeared 2-4 hours after nurses had already escalated care

- Black-box AI model provided no explanation for risk scores

- No workflow integration; just another dashboard to check

The hospital eventually scrapped the system. The vendor blamed “change management issues.” The real problem? The technology worked in the lab but failed in actual clinical environments where seconds matter and trust is earned through transparency.

This is why comprehensive healthcare analytics requires more than just technology—it demands workflow redesign, clinical buy-in, and ongoing validation.
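The alert-fatigue failure in the sepsis story is easier to see with numbers. The source does not say whether “40% false positive rate” means 40% of alerts were false or 40% of non-septic patients were flagged. The sketch below takes the second reading and adds two assumed values, a 5% sepsis prevalence and 80% sensitivity, to show how many alerts would be real.

```python
def alert_profile(prevalence, sensitivity, false_positive_rate, patients=1000):
    """Expected true and false alerts, plus the share of alerts that are real (PPV)."""
    true_alerts = patients * prevalence * sensitivity
    false_alerts = patients * (1 - prevalence) * false_positive_rate
    total = true_alerts + false_alerts
    ppv = true_alerts / total if total else 0.0
    return {"true_alerts": true_alerts, "false_alerts": false_alerts, "ppv": ppv}


# Assumed inputs: 5% prevalence and 80% sensitivity are illustrative, not from the case.
profile = alert_profile(prevalence=0.05, sensitivity=0.80, false_positive_rate=0.40)
print(profile)
```

Under those assumptions, roughly one alert in ten points at a real case. That is the arithmetic behind clinicians learning to ignore the system.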

Platform Comparison: What Are Your Options?

| Platform | Best For | Starting Cost | Epic Integration | Key Limitation |
|---|---|---|---|---|
| Epic Cognitive Computing | Epic shops (obviously) | Included with Epic license | Native | Limited customization |
| Health Catalyst | Multi-EHR environments | $150K-$500K/year | Strong | Requires data warehouse |
| IBM Watson Health | Academic medical centers | $300K-$1M/year | Moderate | Steep learning curve |
| Jvion | Community hospitals | $100K-$300K/year | Good | Limited use cases |

None of these platforms works out of the box. Budget 6-18 months for implementation regardless of vendor claims.

The Cost Question Nobody Answers Clearly

Healthcare executives always ask: “What will this actually cost?” Here’s an honest breakdown for a 300-bed hospital:

Year 1 Costs:

- Software licensing: $150,000-$400,000

- Implementation services: $200,000-$500,000

- Data infrastructure upgrades: $100,000-$300,000

- Staff training: $50,000-$100,000

- Total Year 1: $500,000-$1,300,000

Ongoing Annual Costs:

- Software maintenance: 20% of license cost

- Data scientist/analyst FTE: $120,000-$180,000

- Model monitoring and updates: $50,000-$100,000

- Total Ongoing: $200,000-$360,000/year

Break-even timeline: 18-36 months for readmission reduction use cases, assuming you avoid Medicare readmission penalties worth $500K-$2M annually.

For operational efficiency applications, ROI comes faster (6-12 months) because savings are immediate and measurable.
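The cost ranges above can be turned into a rough break-even estimate. The sketch below sums the line items and then finds the first month in which cumulative savings cover cumulative costs. It rests on simplifying assumptions that are not in the breakdown itself: Year 1 costs are paid up front, ongoing costs start in month 13, and savings accrue evenly from month 1.

```python
YEAR_1_ITEMS = {
    "software_licensing": (150_000, 400_000),
    "implementation_services": (200_000, 500_000),
    "data_infrastructure": (100_000, 300_000),
    "staff_training": (50_000, 100_000),
}
ANALYST_FTE = (120_000, 180_000)
MODEL_MONITORING = (50_000, 100_000)
MAINTENANCE_PCT = 20  # software maintenance as a percent of license cost


def year_1_total():
    """Sum the low and high ends of every Year 1 line item."""
    lows, highs = zip(*YEAR_1_ITEMS.values())
    return sum(lows), sum(highs)


def ongoing_total():
    """Annual maintenance plus analyst FTE plus model monitoring (low, high)."""
    license_low, license_high = YEAR_1_ITEMS["software_licensing"]
    low = license_low * MAINTENANCE_PCT // 100 + ANALYST_FTE[0] + MODEL_MONITORING[0]
    high = license_high * MAINTENANCE_PCT // 100 + ANALYST_FTE[1] + MODEL_MONITORING[1]
    return low, high


def break_even_month(upfront, ongoing_per_year, savings_per_year, horizon=120):
    """First month where cumulative savings cover cumulative costs, else None."""
    for month in range(1, horizon + 1):
        # Both sides are scaled by 12 so the comparison stays in whole dollars.
        savings = savings_per_year * month
        costs = 12 * upfront + ongoing_per_year * max(0, month - 12)
        if savings >= costs:
            return month
    return None


print(year_1_total(), ongoing_total())
print(break_even_month(upfront=900_000, ongoing_per_year=280_000, savings_per_year=500_000))
```

With the hypothetical midpoints in the last call ($900,000 up front, $280,000 ongoing, $500,000 in annual savings), this sketch breaks even in month 34. A real go-live delay before savings begin would push that later.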

Population Health: Beyond Individual Patients

Predictive modeling in healthcare extends to community-level interventions. Health systems using population health analytics combine claims data with environmental monitoring to predict which members face elevated health risks.

This proactive model represents the future of predictive healthcare—moving interventions upstream before acute episodes occur.

The Legal Liability Nobody Talks About

What happens when a predictive model gets it wrong? Say a patient scores “low risk” for readmission, gets discharged, and dies two days later.

Legal precedent remains murky. As of 2025, no major malpractice case has established liability standards for algorithmic failures in healthcare, though several are working their way through the courts.

Key questions:

- Is the algorithm a “medical device” requiring FDA approval?

- Does the doctor’s reliance on an algorithm constitute negligence?

- Are vendors liable for model errors, or just the providers?

Most hospitals address this through careful documentation: “Algorithm suggested X, but clinical judgment led to decision Y.” The algorithm is a decision support tool, not a decision maker.

For more on managing these risks, see our analysis of data governance challenges in healthcare analytics.

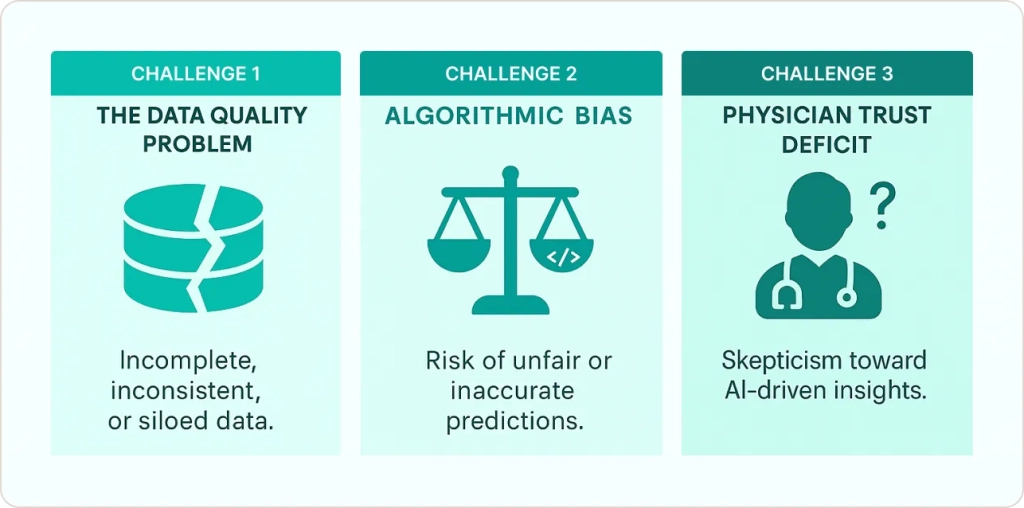

Three Critical Challenges That Kill Projects

Challenge 1: The Data Quality Problem

Hospital readmissions cost $52 billion annually in the U.S., but 30-40% of the relevant patient data sits in unstructured clinical notes. Natural language processing can extract some of that information, but accuracy drops below 70% for complex concepts.

One hospital discovered their “smoking status” field was accurate for only 40% of patients because residents copied forward old social history notes. Garbage in, garbage out.

Challenge 2: Algorithmic Bias

If your training data comes primarily from academic medical centers treating commercially insured patients, your model will underperform for Medicaid populations at safety-net hospitals.

A widely used commercial algorithm was found to systematically underestimate illness severity for Black patients, leading to delayed interventions. The model wasn’t explicitly racist; it used healthcare spending as a proxy for illness severity, and structural inequities mean Black patients receive less care for the same conditions.
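A basic bias audit compares how often the model catches truly high-need patients in each group. The sketch below computes per-group sensitivity at a fixed threshold. The record fields (`group`, `score`, `outcome`), the 0.5 threshold, and the toy data are assumptions for illustration, not the audit method used in the case above.

```python
from collections import defaultdict


def sensitivity_by_group(records, threshold=0.5):
    """Share of patients with a bad outcome who were flagged, per group."""
    flagged = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        if record["outcome"] == 1:
            positives[record["group"]] += 1
            if record["score"] >= threshold:
                flagged[record["group"]] += 1
    return {group: flagged[group] / count for group, count in positives.items()}


# Toy data: every group-A patient with a bad outcome is flagged, but only half of group B.
records = [
    {"group": "A", "score": 0.9, "outcome": 1},
    {"group": "A", "score": 0.7, "outcome": 1},
    {"group": "A", "score": 0.2, "outcome": 0},
    {"group": "B", "score": 0.8, "outcome": 1},
    {"group": "B", "score": 0.3, "outcome": 1},
]
print(sensitivity_by_group(records))
```

A large gap between groups is a signal to inspect the training labels and proxies, since a model can look accurate overall while missing one population.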

Challenge 3: The Physician Trust Deficit

Clinicians (rightfully) distrust black-box algorithms. A Johns Hopkins study found that 67% of physicians would override an algorithmic recommendation if they disagreed, even when the algorithm had a proven 85% accuracy.

Building trust requires:

- Transparent model explanations (what factors drove this prediction?)

- Continuous validation (monthly audits showing accuracy hasn’t drifted)

- Clear accountability (who’s responsible when predictions fail?)
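The continuous-validation item can start as a simple monthly check of accuracy against the level measured at go-live. In the sketch below, the 0.85 baseline, the five-point tolerance, and the month labels are hypothetical; real audits would also track calibration and subgroup performance.

```python
def accuracy(predictions, outcomes):
    """Fraction of predictions that matched what actually happened."""
    if not predictions or len(predictions) != len(outcomes):
        raise ValueError("predictions and outcomes must be non-empty and equal length")
    return sum(p == o for p, o in zip(predictions, outcomes)) / len(predictions)


def months_with_drift(monthly, baseline, tolerance=0.05):
    """Months where accuracy fell more than `tolerance` below the baseline.

    `monthly` maps a month label to a (predictions, outcomes) pair.
    """
    drifted = []
    for month, (predictions, outcomes) in monthly.items():
        if baseline - accuracy(predictions, outcomes) > tolerance:
            drifted.append(month)
    return drifted


monthly = {
    "2025-01": ([1, 0, 1, 0], [1, 0, 1, 0]),
    "2025-02": ([1, 1, 1, 1], [1, 0, 0, 1]),
}
print(months_with_drift(monthly, baseline=0.85))
```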

“One of the most powerful methods for enhancing outcomes is early detection and providing the correct treatments in a timely manner.”

— Dr. Suchi Saria, Johns Hopkins University

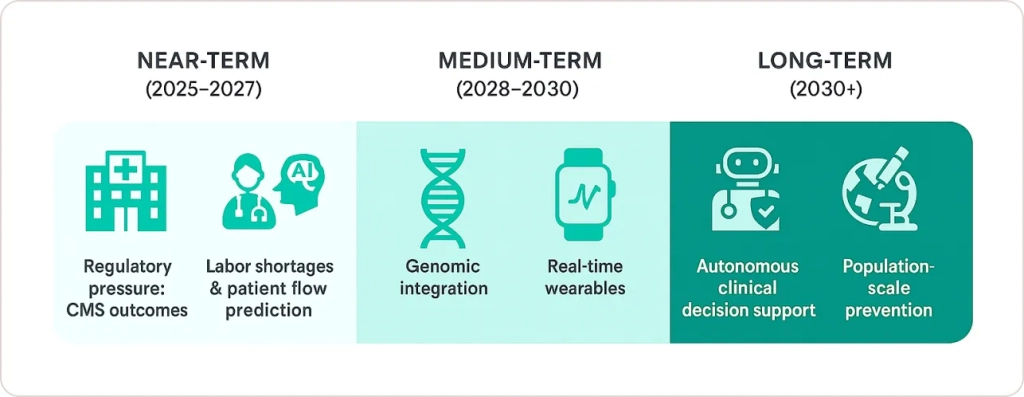

Market Growth and Future Directions

According to Fortune Business Insights, the healthcare predictive analytics market will grow from $16.75 billion in 2024 to $184.58 billion by 2032 (35% CAGR).
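The growth rate can be checked from the two endpoints. A compound annual growth rate is the constant yearly rate that carries the starting value to the ending value over the period, here eight years from 2024 to 2032.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


rate = cagr(start=16.75, end=184.58, years=2032 - 2024)
print(f"{rate:.1%}")
```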

What’s driving this explosive growth?

Near-term (2025-2027):

- Regulatory pressure: CMS tying reimbursement to outcomes

- Labor shortages: AI-driven patient flow prediction in hospitals helps understaffed facilities prioritize

- Consumerization: Patients demanding personalized care

Medium-term (2028-2030):

- Genomic integration: Predicting disease risk from genetic markers

- Real-time wearables: Continuous monitoring feeding predictive models

- Ambient AI: Natural language processing of clinical conversations

Long-term (2030+):

- Fully autonomous clinical decision support

- Population-scale disease prevention

- Precision medicine at scale

But here’s the uncomfortable truth: most hospitals will still be struggling with Stage 1 (data integration) in 2030. The technology advances faster than the organizational capacity to adopt it.

Is Your Organization Ready?

Before investing in predictive analytics in healthcare, assess your current capabilities:

Data infrastructure:

- Single EHR platform or well-integrated multi-platform

- Data warehouse with 3+ years of historical data

- API access to all clinical systems

- Data quality scores above 85% for key fields

Organizational readiness:

- Executive sponsorship from CMO or CMIO

- Data science team or budget to hire

- Clinical champions in target departments

- Change management resources

Use case clarity:

- Specific problem with clear ROI (readmissions, sepsis, etc.)

- Baseline metrics to measure improvement

- Workflow integration plan

- Success criteria defined

If you checked fewer than 75% of these boxes, focus on foundational work before pursuing predictive analytics.
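The 75% rule is easy to apply as a script during a planning session. The sketch below scores the twelve checklist items above; the shortened item keys and the sample answers are made up for illustration.

```python
def readiness(checklist: dict, required: float = 0.75):
    """Return the share of boxes checked and whether it meets the required share."""
    share = sum(checklist.values()) / len(checklist)
    return share, share >= required


# Sample answers are illustrative only.
checklist = {
    "integrated_ehr": True,
    "warehouse_3_years": True,
    "api_access": False,
    "data_quality_85": False,
    "executive_sponsor": True,
    "data_science_team": True,
    "clinical_champions": True,
    "change_management": False,
    "specific_use_case": True,
    "baseline_metrics": True,
    "workflow_plan": True,
    "success_criteria": True,
}
share, ready = readiness(checklist)
print(f"{share:.0%} checked, ready: {ready}")
```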

Conclusion: The Hard Truth About Healthcare AI

Predictive analytics in healthcare works. The evidence is overwhelming: 10-50% reductions in readmissions, 20% faster sepsis detection, millions in cost savings.

But it’s not easy. It’s not fast. And it’s not a technology problem—it’s a sociotechnical challenge requiring simultaneous advances in data infrastructure, clinical workflows, organizational culture, and vendor platforms.

The health systems winning at predictive analytics in healthcare in 2025 share three characteristics:

- Realistic timelines: They budget 18-24 months, not 6

- Clinical integration obsession: They design workflows first, analytics second

- Continuous iteration: They treat models as living systems requiring constant monitoring

According to research from McKinsey and Harvard, AI and predictive analytics could save the U.S. healthcare system $200-360 billion annually. But that’s a ceiling, not a floor. Most hospitals will capture 10-20% of potential value simply because implementation is harder than the technology.

The question isn’t whether to invest in predictive analytics in healthcare. It’s whether your organization can execute the operational changes required to make the technology actually work.