Highlights

- 73% of small-to-midsize businesses struggle with data analytics costs

- Companies using real-time insights are 23 times more likely to acquire customers

- Open-source tools and managed services eliminate the need for expensive enterprise licenses

- Smart implementation strategies reduce costs by 60-80% compared to enterprise approaches

- Strategic planning prevents common pitfalls that lead to budget overruns

Introduction

The data analytics landscape has shifted dramatically. If you still think real-time analytics requires an enterprise-level budget, you’re missing a transformation I’ve been witnessing firsthand.

Cloud providers have fundamentally changed who can afford real-time insights by offering managed streaming services and consumption-based pricing. But while everyone gets excited about the cost savings, I keep seeing the same pattern across data analytics consulting engagements: organizations jump in without understanding what they’re actually getting into.

73% of small-to-midsize businesses struggle with data analytics costs, yet companies using real-time insights are 23 times more likely to acquire customers and 6 times more likely to retain them. In my experience, the organizations that succeed implement their solutions for 60-80% less than traditional enterprise approaches when they plan properly.

In this guide, I’ll show you exactly how to implement budget-friendly real-time analytics tools without sacrificing functionality. Whether you’re starting from basic reporting or evaluating a complete real-time overhaul, these insights will help you do more with less in 2025.

Why I Believe Budget-Friendly Real-Time Analytics Matters More Than You Think

Let me start with something that might surprise you: The biggest barrier to real-time analytics adoption isn’t technical complexity—it’s the misconception that you need enterprise-grade budgets to get started.

I’ve seen this assumption cost organizations millions in delayed competitive advantages. Traditional real-time setups forced massive upfront investments in specialized hardware, enterprise licenses, and dedicated engineering teams. Organizations often spent six figures just to process basic event data.

2025 changes this equation entirely. According to recent market research, the global data analytics market is expanding at a CAGR of 29.40%, driven largely by cloud-native solutions and managed services that make real-time capabilities accessible to smaller organizations.

But here’s where it gets interesting: Unlike typical cost-cutting measures that sacrifice functionality, the modern budget-friendly approaches I implement often deliver better results than expensive legacy systems.

This is because budget constraints force teams to focus on business value rather than technical sophistication.

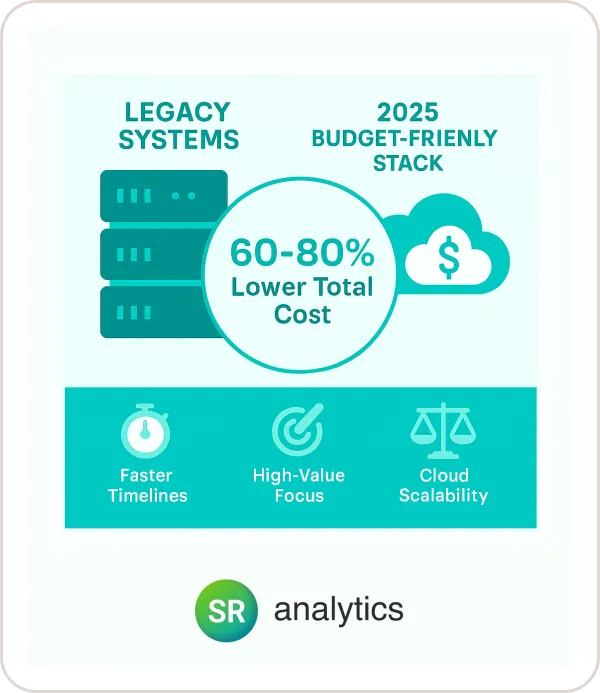

Based on implementations I’ve guided, organizations using strategically implemented real-time analytics database solutions consistently report:

- 60-80% lower total cost of ownership compared to traditional enterprise deployments

- Faster implementation timelines due to managed service adoption

- Better business alignment because budget constraints force focus on high-value use cases

- Improved scalability through cloud-native architectures

But—and this is crucial from my experience—these benefits only materialize when the implementation follows proven cost-optimization principles that most organizations miss.

The Real Cost of Getting Real-Time Analytics Wrong

Before diving into solutions, let me share the most expensive mistake I see repeatedly: the “real-time everything” approach, where companies stream every piece of data without considering whether immediate processing adds business value.

I recently worked with a manufacturing company that spent $15,000 monthly on real-time processing for quarterly compliance reports. The data didn’t change meaningfully by the minute, yet they paid for constant compute resources that delivered zero additional business value.

A simple switch to daily batch processing cut their costs by 90% with no impact on business decisions.

“We thought we needed everything in real-time,” the CTO told me during our first consultation. “It took SR Analytics to show us that only 20% of our data streams actually required immediate processing. That insight alone saved us $120,000 annually.”

Another pattern I’ve observed is tool proliferation without integration planning. Organizations start with one streaming data analytics tool, then add others for different use cases without considering how they’ll work together. This creates expensive data silos and integration costs that often exceed the original platform investments.

In my experience, the hidden costs that catch organizations off-guard include:

- Operational overhead: Self-managed systems requiring dedicated DevOps resources

- Integration complexity: Connecting multiple point solutions that weren’t designed to work together

- Skill gaps: Training teams on complex enterprise platforms when simpler solutions would suffice

- Over-provisioning: Paying for always-on capacity during low-usage periods

Based on implementations I’ve managed, organizations typically spend 40-60% more than initial estimates when they don’t plan for these hidden costs upfront.

When Real-Time Analytics Delivers Maximum ROI

Through years of implementation experience, I’ve found that real-time analytics tools deliver the most value when immediate action can significantly impact revenue or prevent losses. The sweet spot lies in scenarios where every minute of delay translates to measurable business costs.

Revenue-Generating Scenarios I’ve Seen Work:

E-commerce personalization represents one of the highest-ROI applications. I worked with an online retailer who recovered 15% of abandoned carts through real-time intervention systems that triggered immediate email campaigns and dynamic pricing adjustments. This generated an additional $200,000 in monthly revenue.

Digital advertising optimization offers another compelling use case. In my experience implementing marketing analytics solutions, real-time bid adjustments based on campaign performance prevent wasted ad spend while maximizing conversion opportunities. Organizations typically see 20-30% improvement in advertising ROI through real-time campaign optimization.

Risk Mitigation Scenarios That Pay Off:

I’ve seen fraud detection provide immediate business justification for real-time investments. Financial services companies using real-time transaction monitoring prevent an average of $2.4 million in fraudulent charges annually. Our data engineering services often include real-time fraud detection pipelines that pay for themselves within months.

System monitoring represents the defensive side that I always recommend. When a critical server goes down, every minute of downtime can cost thousands in lost revenue and customer trust. Real-time infrastructure monitoring systems typically pay for themselves within the first prevented outage.

My “Batch vs. Real-Time” Decision Framework:

I apply this simple test with every client: if acting on the data an hour later makes no meaningful difference to your business outcome, you probably don’t need real-time processing.

Clear candidates for batch processing that I always recommend:

- Executive reporting and historical trend analysis

- Monthly performance dashboards and quarterly business reviews

- Compliance reporting with regulatory timelines

- Data archiving and long-term storage operations
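
As a rough illustration, the one-hour test can be written as a tiny classifier. The 60-minute cut-off comes from the rule above; the `UseCase` class, the function name, and the delay figures in the demo are hypothetical values chosen for this sketch.

```python
from dataclasses import dataclass

# Cut-off taken from the one-hour rule of thumb in the text.
REAL_TIME_CUTOFF_MINUTES = 60


@dataclass
class UseCase:
    name: str
    # Longest delay (in minutes) before acting on the data stops being useful.
    # These values are estimates you would supply per use case.
    max_useful_delay_minutes: float


def recommend_processing(use_case: UseCase) -> str:
    """Return "real-time" if waiting an hour would hurt, otherwise "batch"."""
    if use_case.max_useful_delay_minutes < REAL_TIME_CUTOFF_MINUTES:
        return "real-time"
    return "batch"


if __name__ == "__main__":
    # Illustrative delay tolerances, not measured figures.
    candidates = [
        UseCase("fraud detection", max_useful_delay_minutes=1),
        UseCase("abandoned-cart intervention", max_useful_delay_minutes=15),
        UseCase("quarterly compliance report", max_useful_delay_minutes=60 * 24),
    ]
    for uc in candidates:
        print(f"{uc.name}: {recommend_processing(uc)}")
```

The point of keeping it this simple is that the hard part is estimating the delay tolerance honestly, not the classification itself.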

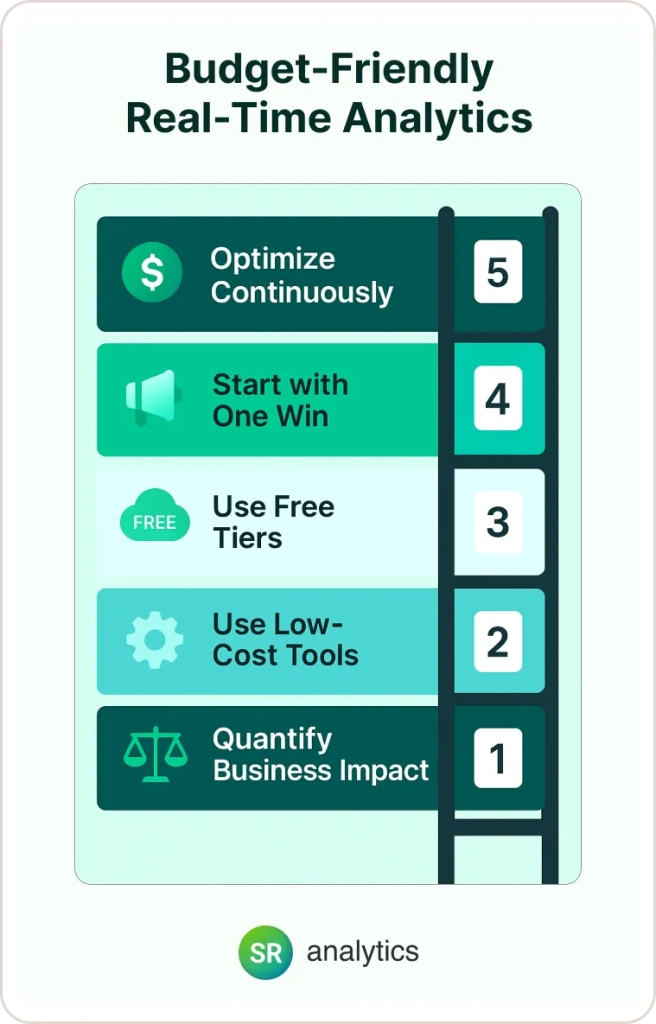

5-Step Framework for Budget-Friendly Real-Time Analytics

Step 1: Calculate Your Real-Time ROI Potential

I start every engagement with a rigorous cost-benefit analysis for each potential real-time data analytics use case. The most successful implementations I’ve guided begin with clear financial justification rather than technical excitement.

My ROI Calculation Approach:

I help clients identify the specific decisions that real-time data will drive and quantify the business impact of faster response times. For example, if real-time inventory alerts could prevent just one $10,000 stockout per month, you can justify up to $2,000-3,000 in monthly analytics costs while maintaining strong ROI.
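
To make the stockout example concrete, here is a minimal sketch of the arithmetic. It assumes ROI is defined as net gain divided by cost, which is one common convention; the function name is hypothetical.

```python
def monthly_roi(monthly_benefit: float, monthly_cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost."""
    if monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive")
    return (monthly_benefit - monthly_cost) / monthly_cost


# Figures from the example: one prevented $10,000 stockout per month,
# against $2,000 to $3,000 in monthly analytics costs.
avoided_stockout = 10_000
for cost in (2_000, 3_000):
    print(f"cost ${cost:,}/month -> ROI {monthly_roi(avoided_stockout, cost):.0%}")
# prints:
# cost $2,000/month -> ROI 400%
# cost $3,000/month -> ROI 233%
```

Under that definition, even the upper end of the cost range returns more than twice what it costs each month.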

Drawing from our experience helping companies like Lifepro Fitness achieve 12% profit increases, I document both revenue opportunities and risk mitigation benefits:

- Revenue opportunities: Customer churn prevention, dynamic pricing, personalized recommendations

- Cost avoidance: Fraud prevention, system downtime reduction, inventory optimization

- Operational efficiency: Automated alerting, reduced manual monitoring, faster issue resolution

Step 2: Choose Your Budget-Friendly Tech Stack

Building an effective real-time analytics database doesn’t require expensive enterprise licenses. Based on dozens of implementations, I’ve identified tools that match current scale while providing clear upgrade paths.

Data Ingestion Options I Recommend:

For simple use cases, I start clients with webhook-based approaches using existing APIs. Services like Zapier or IFTTT can handle basic real-time data flows for under $50 monthly, perfect for validating concepts.

As volume grows, I recommend managed streaming services:

- Apache Kafka managed services: Amazon MSK or Confluent’s free tier

- Cloud-native options: Google Pub/Sub or Azure Event Hubs with pay-per-message pricing

- Simplified alternatives: AWS Kinesis for straightforward streaming workflows

Storage and Processing Layer:

I typically recommend open-source solutions that deliver exceptional performance at zero licensing cost:

- ClickHouse: Excellent real-time performance, cloud offerings start at $30/month

- TimescaleDB: PostgreSQL extension perfect for teams familiar with SQL

- Apache Pinot: Originally developed at LinkedIn, suited to high-scale scenarios

For cloud options, I suggest leveraging platforms that our business intelligence consulting team frequently implements:

- BigQuery: Streaming inserts with automatic scaling

- Snowflake: Real-time data sharing and concurrent workload support

Step 3: Start Small with High-Impact Use Cases

The most successful implementations I’ve managed begin with a single, high-value use case rather than attempting comprehensive coverage from day one. This approach controls both costs and complexity while providing rapid proof of value.

“Start small, think big, move fast,” as one of my most successful clients put it. “SR Analytics helped us prove the concept with our inventory management system first. Once we saw the 20% reduction in stockouts, getting budget approval for additional use cases was easy.”

I help clients select pilot use cases based on clear business impact:

- Inventory management: Stockouts during peak demand periods

- Customer service: Escalation prevention through early warning systems

- Website performance: User experience optimization through real-time monitoring

- Marketing campaigns: Immediate optimization based on performance metrics

My Minimal Viable Pipeline Approach:

I build the simplest possible solution that solves the specific problem:

- Single data source: Start with one critical data stream

- Basic processing: Simple filtering and aggregation rules

- Essential alerting: Threshold-based notifications for immediate action

- Simple dashboard: Key metrics only, no complex visualizations
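
The first three pieces (one data stream, basic filtering and aggregation, threshold alerting) can be sketched in a few lines. This is an illustration only: the event shape, the `process_events` name, and the threshold of 5 units are assumptions, and a real pipeline would read from a stream rather than a list.

```python
from collections import defaultdict
from typing import Iterable

LOW_STOCK_THRESHOLD = 5  # hypothetical alert threshold, in units


def process_events(events: Iterable[dict], threshold: int = LOW_STOCK_THRESHOLD):
    """Track stock per SKU from one event stream and collect low-stock alerts."""
    stock = defaultdict(int)
    already_alerted = set()
    alerts = []
    for event in events:
        # Basic processing: ignore anything that is not an inventory change.
        if event.get("type") not in {"sale", "restock"}:
            continue
        sku, qty = event["sku"], event["quantity"]
        stock[sku] += qty if event["type"] == "restock" else -qty
        # Essential alerting: notify once when a SKU drops below the threshold,
        # and re-arm the alert after it is restocked above it.
        if stock[sku] < threshold and sku not in already_alerted:
            alerts.append(f"LOW STOCK {sku}: {stock[sku]} left")
            already_alerted.add(sku)
        elif stock[sku] >= threshold:
            already_alerted.discard(sku)
    return dict(stock), alerts


if __name__ == "__main__":
    sample = [
        {"type": "restock", "sku": "A", "quantity": 10},
        {"type": "sale", "sku": "A", "quantity": 3},
        {"type": "page_view", "sku": "A"},  # filtered out
        {"type": "sale", "sku": "A", "quantity": 4},
        {"type": "sale", "sku": "A", "quantity": 1},
    ]
    print(process_events(sample))
```

Alerting once per crossing, rather than on every event below the threshold, keeps notifications actionable instead of noisy.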

Step 4: Leverage Managed Services and Cloud Free Tiers

In my experience, cloud providers’ generous free tiers and managed services dramatically reduce both upfront costs and ongoing operational overhead.

Cloud Free Tier Opportunities I Use:

Recent industry analysis shows that organizations increasingly leverage cloud-based solutions for cost-effective analytics. The free tiers I regularly utilize include:

- AWS Kinesis: 1 million PUT records free monthly

- Google Pub/Sub: 10GB of messages free monthly

- Azure Event Hubs: 1 million events free monthly

- BigQuery: 1TB of queries and 10GB of storage free monthly

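These allowances can support meaningful real-time workloads before triggering charges, which makes them well suited to proof-of-concept development. Free-tier terms change over time and differ by service, so confirm each figure on the provider’s current pricing page before budgeting around it.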

Step 5: Implement Ongoing Cost Optimization

Real-time systems can accumulate costs quickly if left unmonitored. I implement proactive cost management that combines automated monitoring with regular manual reviews.

Essential Practices I Always Implement:

Data Retention Optimization:

- Hot storage (1-7 days): Recent data in fast, expensive storage

- Warm storage (8-90 days): Older data in cheaper storage

- Cold storage (91+ days): Long-term archival in object storage
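
A tiering rule like this can be expressed as a small function. The sketch below uses the day boundaries from the list and assumes data newer than one day also counts as hot; the function name is hypothetical, and actually moving the data is left to whatever storage you use.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

HOT_MAX_AGE = timedelta(days=7)    # boundaries taken from the tiers above
WARM_MAX_AGE = timedelta(days=90)


def storage_tier(event_time: datetime, now: Optional[datetime] = None) -> str:
    """Classify a record as hot, warm, or cold based on its age."""
    now = now or datetime.now(timezone.utc)
    age = now - event_time
    if age <= HOT_MAX_AGE:
        return "hot"
    if age <= WARM_MAX_AGE:
        return "warm"
    return "cold"


if __name__ == "__main__":
    reference = datetime(2025, 1, 1, tzinfo=timezone.utc)
    for days in (3, 30, 120):
        print(days, "days old ->", storage_tier(reference - timedelta(days=days), reference))
```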

According to market research, 63% of organizations expect to increase their spending on business intelligence and data analytics, making cost optimization crucial for sustainable growth.

Query Performance Optimization:

- Pre-aggregate common metrics during ingestion

- Implement caching layers for repetitive queries

- Use materialized views for frequently accessed data

- Review performance regularly to optimize costly operations
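
The first practice, pre-aggregating during ingestion, is the easiest to show in isolation. The sketch below keeps per-minute counts and totals in memory so a dashboard can read a rollup instead of scanning raw events. The `MinuteRollup` class is hypothetical; in a real deployment the database would usually maintain this rollup for you.

```python
from collections import defaultdict
from datetime import datetime
from typing import Optional


class MinuteRollup:
    """Maintain per-minute count and total as events arrive."""

    def __init__(self) -> None:
        self._buckets = defaultdict(lambda: {"count": 0, "total": 0.0})

    @staticmethod
    def _minute(ts: datetime) -> datetime:
        # Truncate the timestamp to the start of its minute.
        return ts.replace(second=0, microsecond=0)

    def ingest(self, ts: datetime, value: float) -> None:
        bucket = self._buckets[self._minute(ts)]
        bucket["count"] += 1
        bucket["total"] += value

    def average(self, minute: datetime) -> Optional[float]:
        # .get() avoids creating an empty bucket for minutes with no data.
        bucket = self._buckets.get(self._minute(minute))
        if bucket is None:
            return None
        return bucket["total"] / bucket["count"]


if __name__ == "__main__":
    rollup = MinuteRollup()
    rollup.ingest(datetime(2025, 1, 1, 12, 0, 5), 10.0)
    rollup.ingest(datetime(2025, 1, 1, 12, 0, 40), 20.0)
    print(rollup.average(datetime(2025, 1, 1, 12, 0)))  # 15.0
```

Queries against the rollup touch one small record per minute regardless of how many raw events arrived, which is where the cost saving comes from.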

Real-World Success Story: How I Helped a Retailer Save $200K

Let me share a recent success story that perfectly illustrates these principles in action, similar to how we’ve helped companies like BJJ Fanatics improve conversion rates across multiple brands.

A regional retail chain with 15 locations came to me with critical inventory visibility challenges costing significant revenue during peak seasons. Their legacy system updated inventory only twice daily, leading to stockouts of popular items while overstock tied up working capital.

The Challenge I Discovered:

During my assessment, I found that delayed inventory visibility cost an estimated $75,000 in lost revenue per quarter. Manual inventory checks consumed 15 hours weekly across store management teams.

“We knew we had a problem, but we didn’t realize the true cost until SR Analytics showed us the numbers,” the operations manager told me. “The real-time solution they implemented not only solved our stockout issues but actually improved our overall inventory management strategy.”

My Budget-Friendly Solution:

I implemented a real-time solution using entirely managed cloud services:

- Data ingestion: POS systems connected via webhooks to AWS Kinesis ($45/month)

- Stream processing: AWS Lambda functions for real-time calculations ($35/month)

- Database: TimescaleDB cloud instance for time-series analytics storage ($80/month)

- Visualization: Grafana dashboards with automated alerting ($0 – open source)

- Monitoring: CloudWatch for system health and cost tracking ($15/month)

Total monthly cost: $175 plus minimal data transfer fees.
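
For anyone who wants to check the total or adapt it to their own stack, the arithmetic is a one-liner. The line items are the figures listed above; data transfer fees are excluded because no amount is given for them.

```python
# Monthly line items from the stack described above (USD).
monthly_costs = {
    "AWS Kinesis (ingestion)": 45,
    "AWS Lambda (stream processing)": 35,
    "TimescaleDB cloud (database)": 80,
    "Grafana (visualization)": 0,
    "CloudWatch (monitoring)": 15,
}

total_monthly = sum(monthly_costs.values())
total_annual = total_monthly * 12

print(f"Monthly: ${total_monthly}")   # Monthly: $175
print(f"Annual:  ${total_annual:,}")  # Annual:  $2,100
```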

Results I Achieved:

- 20% reduction in stockouts during the holiday season

- $45,000 prevented revenue loss in the first quarter

- 15 hours weekly time savings across store management teams

- ROI of 1,850% in the first year

This success story demonstrates how strategic implementation of small business analytics tools can deliver enterprise-grade results without enterprise-level costs.

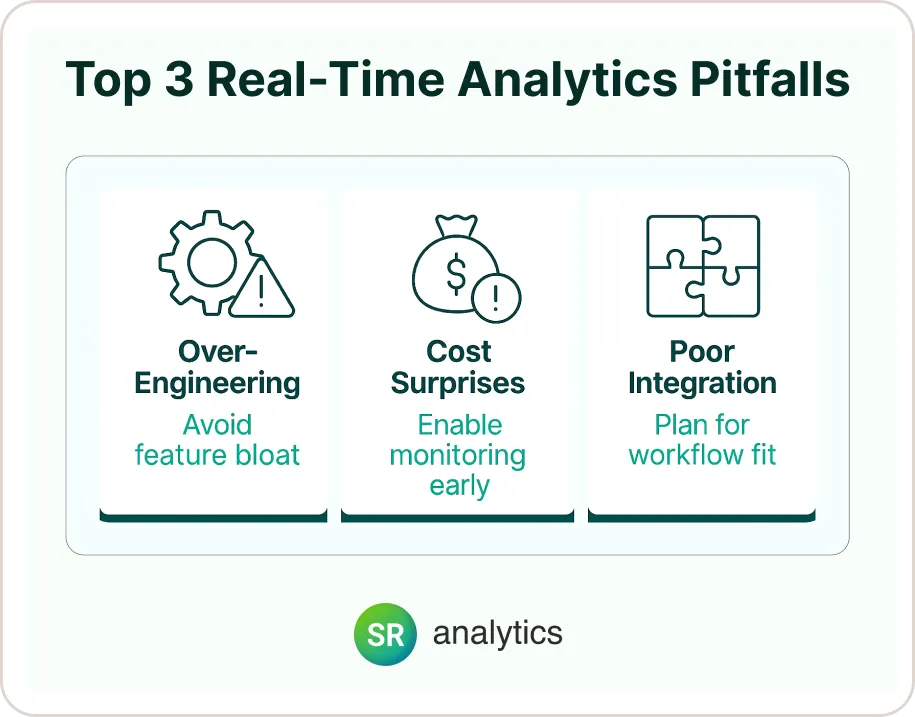

Common Implementation Pitfalls I’ve Learned to Avoid

After guiding numerous implementations, I’ve identified recurring mistakes that can derail both timelines and budgets.

Pitfall 1: Over-Engineering Initial Solutions

I see teams attempt comprehensive platforms from day one rather than solving specific problems incrementally.

My approach: Start with minimal viable solutions that address highest-priority use cases. Resist feature creep and focus on proving business value first.

Pitfall 2: Inadequate Cost Monitoring

The consumption-based pricing model can lead to cost surprises if usage patterns aren’t properly monitored from day one.

My solution: I implement cost monitoring and alerting before processing any production data, with conservative budgets that scale based on proven value.
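
One simple form of cost alerting is to project month-end spend from the month-to-date figure and compare it with the budget. The sketch below assumes a linear run rate; the function names, the 80% warning ratio, and the $200 budget are illustrative choices. In practice the month-to-date number would come from your cloud provider’s billing data.

```python
import calendar
from datetime import date


def projected_month_end_spend(spend_to_date: float, today: date) -> float:
    """Extrapolate month-to-date spend linearly to the end of the month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def budget_status(spend_to_date: float, monthly_budget: float, today: date,
                  warn_ratio: float = 0.8) -> str:
    """Return "over", "warning", or "ok" based on projected spend."""
    projected = projected_month_end_spend(spend_to_date, today)
    if projected > monthly_budget:
        return "over"
    if projected > warn_ratio * monthly_budget:
        return "warning"
    return "ok"


if __name__ == "__main__":
    today = date(2025, 6, 10)  # June has 30 days
    for spend in (50, 60, 70):
        print(spend, "->", budget_status(spend, monthly_budget=200, today=today))
```

A linear projection is crude for spiky workloads, but it flags a runaway stream within days rather than at the end of the billing cycle.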

Pitfall 3: Ignoring Integration Requirements

Teams often focus on individual tools without considering how they’ll integrate with existing systems and workflows.

My approach: I always assess existing Google Analytics implementations and other analytics tools already in place to ensure seamless integration.

Making the Decision: Is This Right for You?

Based on my experience implementing cost-effective real-time analytics database solutions across different industries, here’s my honest assessment of when these approaches make sense.

Budget-friendly real-time analytics is likely a good fit if:

- You currently rely on daily or weekly reports for time-sensitive decisions

- Your business has clear use cases where faster insights drive measurable value

- You’re comfortable with cloud-based managed services

- Your team can dedicate time to learning new tools and processes

I recommend traditional approaches if:

- Your decision-making timelines don’t require sub-hour response times

- You have significant regulatory requirements for on-premises data processing

- Budget constraints require completely predictable monthly costs

- You’re heavily invested in existing analytics tools that meet current needs

Consider a phased approach if:

- You want to test real-time capabilities without full organizational commitment

- Your team needs time to develop new skills gradually

- Budget approval for full implementation will take time

According to industry forecasts, organizations that combine real-time analytics with AI and machine learning capabilities will gain the most competitive advantage in 2025.

Transform Your Analytics Strategy Without Breaking the Bank

In 2025, the most successful real-time analytics initiatives aren’t driven by the biggest budgets—they’re driven by clarity, strategic focus, and smart architectural decisions. Businesses that prioritize value-driven use cases and lean implementation often achieve more meaningful outcomes at a fraction of the traditional cost.

Yet navigating streaming technologies, tool selection, and cost management can be complex—especially without dedicated expertise. That’s where experienced data analytics consulting services can provide clarity, helping organizations align their technical efforts with real business goals.

If you’re considering real-time capabilities or refining existing systems, a structured, ROI-first approach can help you avoid common pitfalls and deliver real business impact.