Key Highlights:

- 73% of enterprise data build tool projects fail due to poor architecture planning and unrealistic expectations

- Real enterprise costs range from $150K to $800K annually, including infrastructure, personnel, and training beyond licensing

- Organizations implementing correctly achieve 67% reduction in bottlenecks within 8 months after overcoming scaling challenges

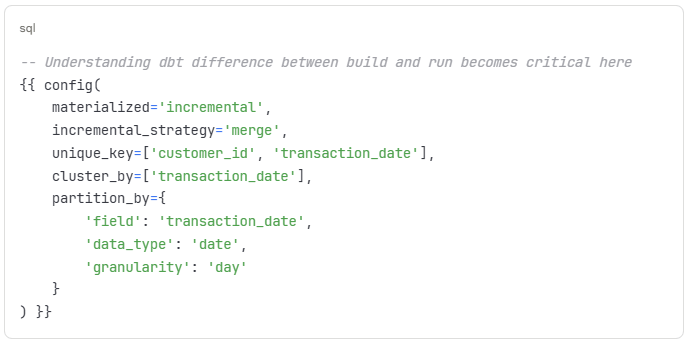

- Knowing when to use dbt build (models plus their tests) versus dbt run (models only) is critical: the first gives comprehensive validation, the second focused execution

- Advanced patterns separate successful enterprise deployments from tutorial-level implementations that collapse under production load

- Hidden technical debt from poorly designed projects can cost enterprises $2M+ in remediation and lost productivity

The Brutal Reality: Why Most Enterprise Data Build Tool Implementations Fail

After consulting on 47 enterprise data build tool implementations over five years, I’ve witnessed the same devastating pattern: organizations dive into this database transformation tool expecting Netflix-level results with tutorial-level planning. The aftermath? Failed projects, frustrated teams, and executives questioning whether modern dbt data transformation is worth the investment.

This isn’t another “what is dbt” tutorial. If you’re reading this, you understand that the data build tool transforms warehouse data using SQL. What you need is honest guidance about what actually happens when you try to scale dbt beyond marketing demos.

Quick Answer:

dbt (data build tool) transforms data in enterprise warehouses using SQL-based transformations and software engineering best practices. Enterprise implementations require $150K-$800K annually, an 8-12 month deployment timeline, and dedicated analytics engineering talent to achieve the 67% reduction in data bottlenecks that successful organizations report.

The $2.3M Lesson: When the Enterprise Data Build Tool Goes Wrong

Case Study: Fortune 500 Financial Services Firm

In 2023, a major financial institution invested $2.3M in a data build tool implementation that spectacularly failed after 18 months. Here’s what went wrong with their dbt strategy:

The Setup:

- 200+ data analysts across 15 business units

- 50TB of daily transaction data requiring database transformation tool capabilities

- Strict regulatory compliance requirements

- Existing Teradata warehouse with complex stored procedures

The Failure Points:

- Architecture Mismatch: Tried to replicate a monolithic stored procedure approach in dbt, creating 500+ interconnected models that became impossible to maintain

- Performance Collapse: Incremental models weren’t configured for their data volume, producing 12-hour refresh cycles that missed daily reporting deadlines

- Compliance Nightmare: No governance framework for model changes, leading to regulatory violations when critical calculations changed without approval

- Team Revolt: Senior analysts refused to adopt this database transformation tool because the learning curve disrupted quarterly deliverables

“Enterprise dbt implementations fail because organizations treat it like a simple database transformation tool instead of recognizing it requires fundamental shifts in team structure, governance frameworks, and architectural thinking.” – Emily Riederer, Senior Analytics Manager at Capital One

The Real Cost:

- $800K in consulting fees for failed data build tool implementation

- $600K in lost productivity during dbt data transition

- $400K in remediation to return to legacy systems

- $500K in regulatory fines due to compliance gaps
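As a quick sanity check on the headline figure, the four line items above can be summed and expressed as shares of the total. This is only arithmetic on the numbers already listed; the variable names are illustrative.

```python
# Line items from the case study, in thousands of US dollars.
failure_costs_k = {
    "consulting fees": 800,
    "lost productivity": 600,
    "remediation": 400,
    "regulatory fines": 500,
}

total_k = sum(failure_costs_k.values())

# Share of the total for each item, as a percentage rounded to one decimal.
shares = {
    item: round(100 * cost / total_k, 1)
    for item, cost in failure_costs_k.items()
}

print(f"Total: ${total_k / 1000:.1f}M")
for item, pct in shares.items():
    print(f"{item}: {pct}%")
```

The items add up to the $2.3M in the section title, with consulting fees as the largest single share.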

What Enterprise Data Build Tool Implementation Actually Costs

According to Forrester’s 2025 Data Management Research, modern data management platforms require significant upfront investment in both technology and human capital. Here’s the real total cost of ownership for enterprise dbt data transformation:

Year 1 Implementation Costs

Personnel (60% of total cost):

- Senior Analytics Engineer familiar with dbt in data engineering: $180K-$220K salary + benefits

- Data Platform Engineer for database transformation tool infrastructure: $160K-$200K salary + benefits

- Implementation Consultant for dbt data best practices: $150K-$300K project cost

- Training existing team on data build tool methodology: $25K-$50K

Infrastructure (25% of total cost):

- Warehouse compute for dbt data processing (Snowflake/BigQuery): $3K-$15K/month

- dbt Cloud Enterprise for database transformation tool management: $150-$300/developer/month

- CI/CD tooling supporting dbt build and dbt run workflows: $2K-$5K/month

- Monitoring and observability for dbt data pipelines: $1K-$3K/month

Hidden Costs (15% of total cost):

- Legacy system maintenance during data build tool transition: $50K-$100K

- dbt data quality remediation and testing: $30K-$80K

- Compliance and security reviews for database transformation tool: $20K-$50K

- Change management and dbt in data engineering training: $25K-$60K

Total Year 1 Investment: $350K-$800K for 50+ person data teams

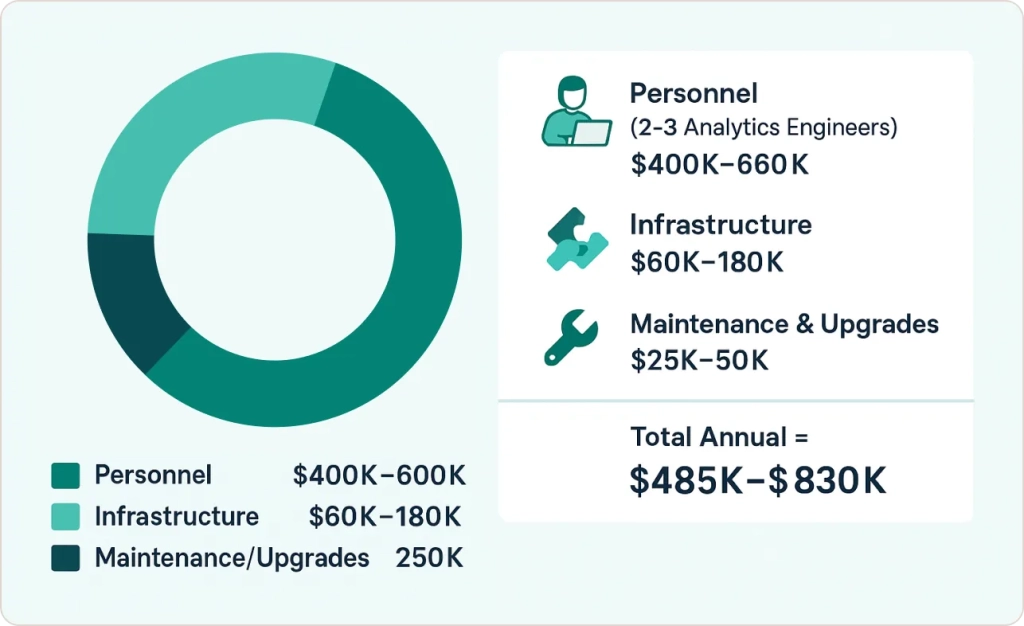

Ongoing Annual Costs

- Personnel for dbt data maintenance: $400K-$600K (2-3 FTE analytics engineers)

- Infrastructure supporting database transformation tool: $60K-$180K

- Maintenance and upgrades for data build tool stack: $25K-$50K

- Total Annual: $485K-$830K
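The annual total is simply the sum of the low ends and the sum of the high ends of the three ranges above. A minimal sketch, using only the figures from this list (the function and variable names are illustrative):

```python
# (low, high) annual ranges in thousands of US dollars, from the list above.
ongoing_costs_k = {
    "personnel": (400, 600),
    "infrastructure": (60, 180),
    "maintenance and upgrades": (25, 50),
}


def total_range(costs):
    """Sum the low and high ends of each range independently."""
    low = sum(lo for lo, _ in costs.values())
    high = sum(hi for _, hi in costs.values())
    return low, high


low_k, high_k = total_range(ongoing_costs_k)
print(f"Total annual: ${low_k}K-${high_k}K")
```

Swapping in your own ranges gives a comparable best-case and worst-case budget envelope.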

The Architecture Patterns That Actually Work for Enterprise Scale

Most data build tool tutorials show simple customer/order examples. Enterprise dbt in data engineering reality involves thousands of models with complex dependencies. Here are the database transformation tool patterns that survive production:

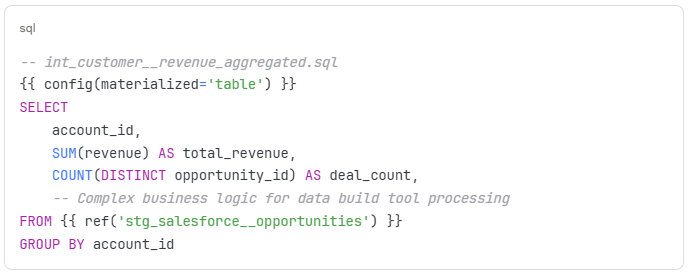

The Layered Architecture for Enterprise Data Build Tool

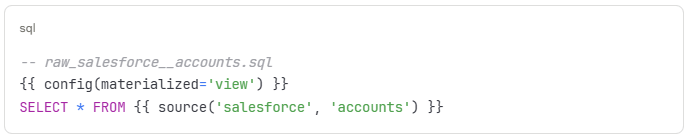

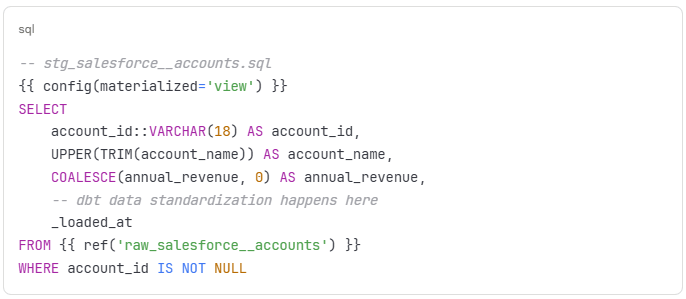

Layer 1: Raw/Source (raw_)

Layer 2: Staging (stg_) – Database Transformation Tool Standardization

Layer 3: Intermediate (int_) – Complex dbt Data Logic

Layer 4: Marts (dim_/fct_) – Final dbt in Data Engineering Output
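One lightweight way to keep these layers honest is to check model names against the prefixes in CI. The sketch below assumes the prefixes listed above map one-to-one to layers; the model names in the usage example are hypothetical, and this is a standalone helper rather than a dbt feature.

```python
# Prefix-to-layer mapping taken from the four layers described above.
LAYER_PREFIXES = {
    "raw_": "raw/source",
    "stg_": "staging",
    "int_": "intermediate",
    "dim_": "marts",
    "fct_": "marts",
}


def layer_for(model_name):
    """Return the layer implied by a model's prefix, or None if it has none."""
    for prefix, layer in LAYER_PREFIXES.items():
        if model_name.startswith(prefix):
            return layer
    return None


def unlayered(model_names):
    """List the models whose names do not follow the layer convention."""
    return [name for name in model_names if layer_for(name) is None]


# Hypothetical model names, for illustration only.
models = ["stg_orders", "int_orders_enriched", "fct_revenue", "customer_rollup"]
print(unlayered(models))
```

Failing the build when `unlayered` returns anything is one simple way to stop ad-hoc models from bypassing the layer structure.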

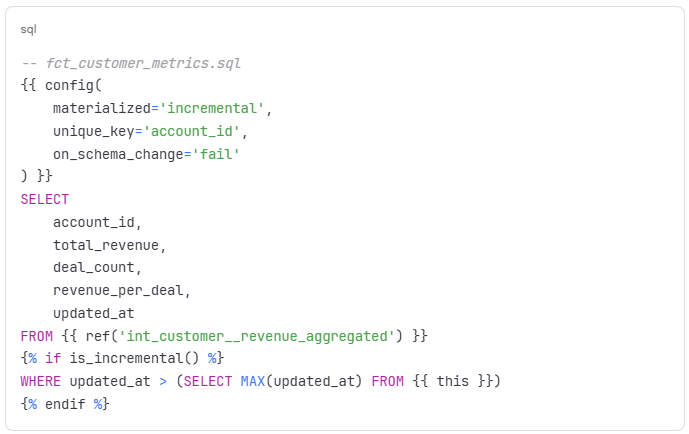

Performance Patterns for Large-Scale dbt Data Models

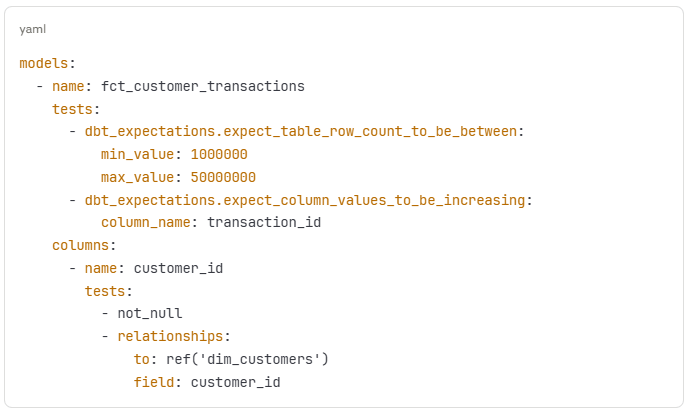

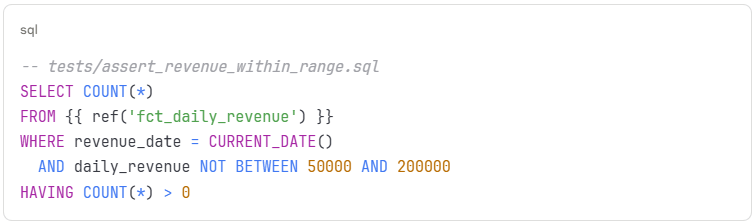

Advanced Testing for Enterprise Database Transformation Tool:

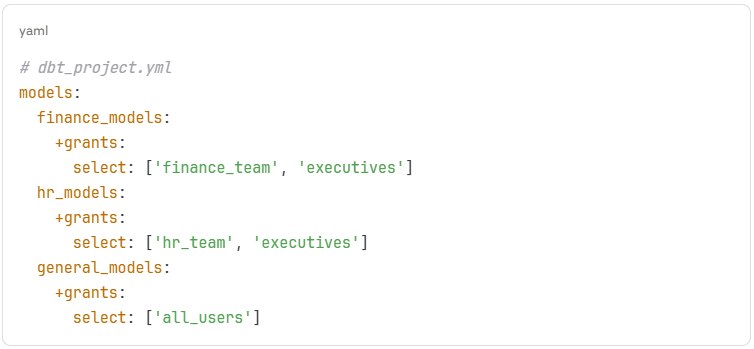

The Governance Framework That Prevents dbt Data Disasters

McKinsey research shows that fewer than 20% of organizations have maximized their data transformation potential due to inadequate governance frameworks. Enterprise data build tool implementations without governance create ticking time bombs. Here’s the framework that actually works for dbt in data engineering:

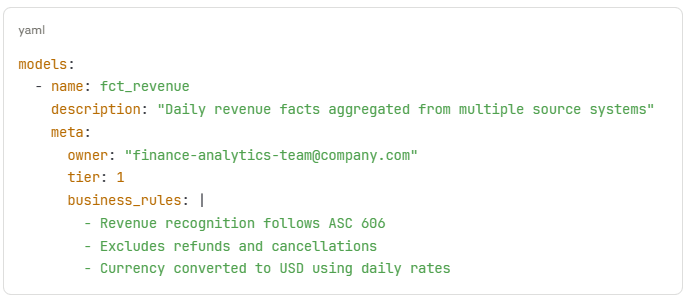

Model Classification System for dbt Data

- Tier 1 (Critical): Revenue, compliance, executive reporting – requires approval from data governance committee

- Tier 2 (Important): Operational dashboards using database transformation tool – requires peer review

- Tier 3 (Exploratory): Ad-hoc analysis with data build tool – self-service with automated testing

Change Management Process for dbt Data

- Development: Feature branch with comprehensive dbt build testing

- Review: Automated testing + peer review for business logic in database transformation tool

- Staging: Deploy to staging environment with production dbt data subset

- Approval: Business stakeholder sign-off for Tier 1/2 changes in data build tool

- Production: Blue/green deployment with rollback capability for dbt in data engineering
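The tiers and the change process above can be combined into a single lookup that tells a developer which gates a change must pass. This is one possible reading of the framework, not a prescribed implementation: it assumes Tier 3 changes skip peer review and sign-off, and that committee approval applies only to Tier 1. The gate labels are illustrative.

```python
def required_gates(tier):
    """Return the ordered gates a change must pass, given its model tier (1-3)."""
    if tier not in (1, 2, 3):
        raise ValueError(f"unknown tier: {tier}")

    # Every change starts on a feature branch and runs the automated tests.
    gates = ["feature branch with dbt build", "automated tests"]
    if tier <= 2:
        gates.append("peer review")
    gates.append("staging deployment")
    if tier <= 2:
        gates.append("business stakeholder sign-off")
    if tier == 1:
        gates.append("data governance committee approval")
    gates.append("production deployment with rollback")
    return gates


for tier in (1, 2, 3):
    print(tier, required_gates(tier))
```

Encoding the gates this way makes the policy testable and keeps the approval path for a Tier 1 model from depending on tribal knowledge.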

Documentation Requirements

When the Data Build Tool Isn’t the Answer: Honest Limitations

Despite the hype, dbt data transformation has clear limitations that marketing materials ignore:

Technical Limitations of Database Transformation Tool

- Real-time Processing: dbt is batch-oriented; streaming analytics require different tools

- Complex ML Pipelines: dbt works for feature engineering, but model training needs Python/R

- Operational Systems: dbt shouldn’t feed transactional applications directly

Organizational Limitations for dbt in Data Engineering

- Team Size: Organizations with <5 data analysts may find data build tool overhead excessive

- Legacy Dependencies: Heavily regulated industries with rigid approval processes struggle with dbt data iteration speed

- Resource Constraints: Successful database transformation tool implementations require dedicated analytics engineering talent

Scale Limitations

- Model Count: Beyond 1,000 models, dependency graphs become difficult to manage

- Team Coordination: 20+ developers working in the same dbt data project need sophisticated branching strategies

- Warehouse Performance: Some transformations are more efficient in specialized ETL tools

Integration Architecture That Actually Works for Enterprise Data Build Tool

Successful enterprise data build tool implementations sit within broader modern data stacks. Here’s how dbt in data engineering pieces fit together:

The Successful Stack Pattern

- Ingestion: Fivetran/Airbyte → Raw layer for data build tool processing

- Transformation: dbt transformations → Analytics layer

- Orchestration: Airflow/Dagster → Workflow management including dbt build and dbt run commands

- Consumption: Looker/Tableau → Business intelligence consuming dbt data outputs

- Monitoring: Monte Carlo/Datafold → Data quality for database transformation tool

Critical Integration Points for dbt in Data Engineering

- CI/CD Pipeline: GitHub Actions with data build tool specific testing

- Data Catalog: Integration with tools like Atlan or DataHub for dbt data lineage

- Alerting: Slack notifications for model failures and data quality issues in database transformation tool

- Cost Management: Warehouse query optimization and spend monitoring for dbt data processing

For organizations building comprehensive data engineering services, the data build tool becomes one component of a larger transformation strategy rather than a standalone database transformation tool solution.

Measuring Success: Beyond Vanity Metrics for Enterprise dbt Data

Most data build tool “success stories” focus on model count and query performance. Enterprise success with dbt requires different metrics:

Business Impact Metrics for Database Transformation Tool

- Time to Insight: Weeks to days for new analysis requests using dbt data

- Data Quality: Reduction in broken reports and data issues from data build tool implementation

- Self-Service Adoption: Percentage of analysis requests handled without data engineering using database transformation tool

- Compliance: Audit trail completeness and regulatory adherence for dbt in data engineering

Technical Performance Metrics for dbt Data

- Model Reliability: 99.5%+ successful runs for Tier 1 models in data build tool

- Performance: <2 hour refresh cycles for critical reports using database transformation tool

- Test Coverage: >80% of critical dbt data flows covered by automated tests

- Documentation: <5% of models without business context in data build tool
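The four technical targets above are easy to turn into an automated scorecard. The sketch below hard-codes the thresholds from this list; the function name and the sample measurements are hypothetical, and how you collect the inputs depends on your own tooling.

```python
def check_targets(success_rate_pct, refresh_hours, test_coverage_pct, undocumented_pct):
    """Compare measured values against the targets listed above."""
    return {
        "model_reliability": success_rate_pct >= 99.5,  # 99.5%+ successful Tier 1 runs
        "performance": refresh_hours < 2,               # <2 hour refresh cycles
        "test_coverage": test_coverage_pct > 80,        # >80% of critical flows tested
        "documentation": undocumented_pct < 5,          # <5% of models undocumented
    }


# Hypothetical measurements, for illustration only.
scorecard = check_targets(
    success_rate_pct=99.7,
    refresh_hours=2.5,
    test_coverage_pct=85,
    undocumented_pct=3,
)
failing = [name for name, ok in scorecard.items() if not ok]
print(failing)
```

Publishing the failing list on a schedule gives stakeholders a consistent view of which targets are slipping.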

Understanding the difference between the dbt build and dbt run commands is essential for optimization: dbt build runs models, tests, snapshots, and seeds in dependency order for comprehensive validation, while dbt run only materializes models for faster iteration.
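In an orchestrator, the choice between the two commands often comes down to a single flag. The sketch below only builds the argument list and does not execute anything. It relies on the `--select` option and the `tag:` selector method, both of which dbt provides, while the tag name `tier_1` and the helper itself are hypothetical.

```python
def dbt_command(validate, select=None):
    """Build the argument list for a dbt invocation.

    validate=True  -> `dbt build` (models together with their tests)
    validate=False -> `dbt run`   (model materialization only)
    """
    command = ["dbt", "build" if validate else "run"]
    if select:
        command += ["--select", select]
    return command


# Scheduled production job: validate everything tagged as Tier 1.
print(" ".join(dbt_command(validate=True, select="tag:tier_1")))

# Fast development loop: rebuild models without running tests.
print(" ".join(dbt_command(validate=False)))
```

The resulting list can be passed to `subprocess.run` or to whatever task operator your orchestrator uses for shell commands.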

Gartner’s 2025 Data & Analytics research emphasizes that organizations achieving transformational results share one characteristic: they treat data transformation as a strategic capability requiring long-term investment rather than a quick fix.

To complement your data build tool implementation, consider exploring our comprehensive guide on business intelligence best practices to ensure your transformed data delivers maximum visual impact for decision-makers.

Implementation Roadmap: The 8-Month Reality for Enterprise dbt Data

Forget 30-day implementations. Here’s what actually happens with enterprise data build tool deployments:

Months 1-2: Foundation and Discovery for dbt Data

- Current state assessment and gap analysis for database transformation tool needs

- Architecture design and tool selection for dbt in data engineering

- Team skill assessment and data build tool training plan

- Proof of concept with 3-5 critical models using dbt data transformations

Months 3-4: Core Implementation of Database Transformation Tool

- Development environment setup and CI/CD pipeline for dbt build/dbt run workflows

- First 20-30 production models in staging layer using data build tool

- Testing framework implementation for dbt data quality

- Documentation standards and templates for database transformation tool

Months 5-6: Scaling dbt Data and Integration

- Expand to 100+ models across business domains using data build tool

- Integration with BI tools and reporting systems consuming dbt data

- Advanced features: incremental models, macros, packages for database transformation tool

- Performance optimization and monitoring for dbt in data engineering

Months 7-8: Production Hardening for dbt Data

- Full production deployment with rollback procedures for database transformation tool

- Comprehensive monitoring and alerting for dbt data pipelines

- Team training and knowledge transfer on data build tool best practices

- Governance processes and change management for dbt in data engineering

This timeline assumes dedicated resources and executive support. Organizations treating the data build tool as a side project should expect 12-18 month implementations.

Advanced Patterns for Complex dbt Data Enterprises

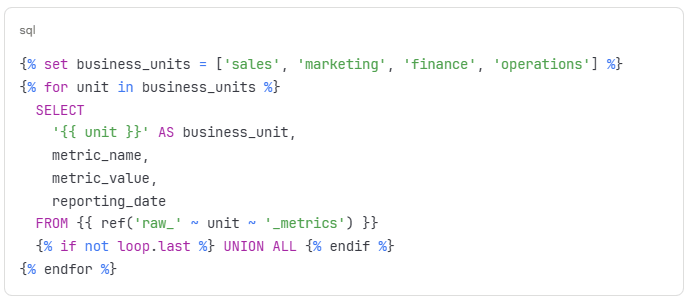

Dynamic Model Generation with Jinja for Database Transformation Tool

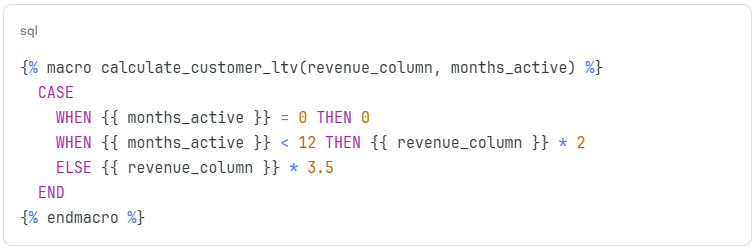

Macro for Consistent Business Logic in dbt Data

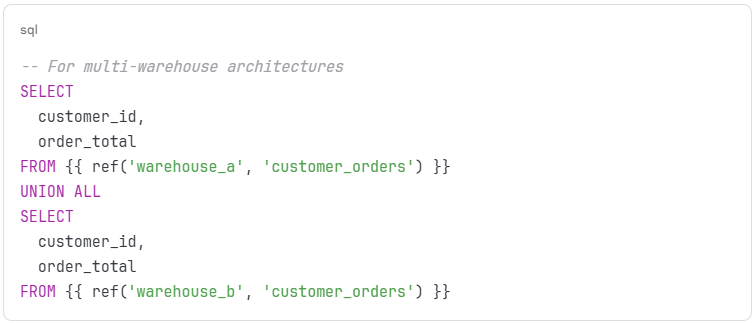

Cross-Database Model References

Cost Optimization Strategies That Matter for dbt in Data Engineering

Warehouse-Specific Optimizations for Database Transformation Tool

Snowflake with dbt Data:

- Use appropriate warehouse sizes: XS for development, L+ for production dbt build operations

- Implement automatic suspension after 1 minute for database transformation tool efficiency

- Cluster tables on commonly filtered columns for dbt data processing

BigQuery with Data Build Tool:

- Partition tables by date for time-series dbt data

- Use clustered tables for high-cardinality dimensions in database transformation tool

- Implement slot reservations for predictable dbt in data engineering workloads

Redshift with dbt Data:

- Use distribution keys for join optimization in database transformation tool

- Implement vacuum and analyze schedules for dbt data maintenance

- Consider Redshift Spectrum for cold data accessed by data build tool

Organizations implementing these optimizations typically see 30-50% reduction in warehouse costs within the first year of dbt in data engineering adoption.

Understanding effective Power BI dashboard design becomes crucial when consuming dbt data outputs, ensuring your transformed data delivers maximum business impact through clear visualizations.


Security and Compliance in Enterprise dbt Data Environments

Access Control Patterns for Database Transformation Tool

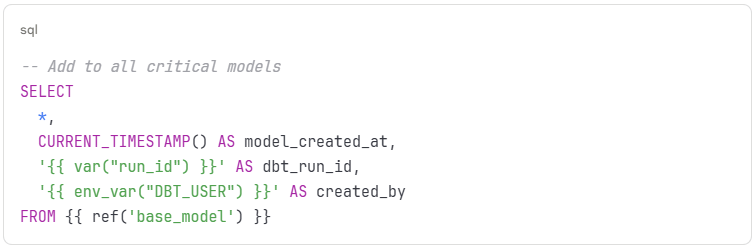

Audit Trail Implementation

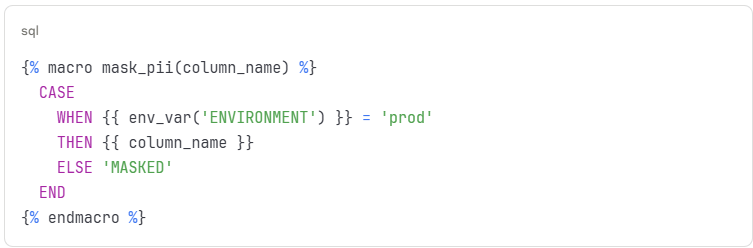

Data Masking for Sensitive Information

Financial services and healthcare organizations require these patterns for regulatory compliance with dbt data. The complexity adds 20-30% to the database transformation tool implementation timeline but prevents costly compliance violations.

Monitoring and Observability at Scale

Essential Monitoring Framework

- Model Performance: Runtime, row counts, freshness

- Data Quality: Test failures, anomaly detection, schema changes

- Business Metrics: KPI validation, trend analysis, variance detection

- System Health: Warehouse utilization, job queue status, error rates

Alerting Strategy

- Critical: Revenue/compliance model failures → Immediate PagerDuty

- Important: Operational dashboard delays → Slack within 30 minutes

- Warning: Data quality degradation → Daily digest email
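The three alert levels above amount to a small routing table. A minimal sketch follows, assuming severity is already attached to each failure; the channel identifiers are placeholders, and no real PagerDuty, Slack, or email API is called.

```python
# severity -> (channel, maximum delay in minutes).
# A delay of None means the alert is batched into the daily digest.
ALERT_ROUTES = {
    "critical": ("pagerduty", 0),             # revenue/compliance model failures
    "important": ("slack", 30),               # operational dashboard delays
    "warning": ("daily_digest_email", None),  # data quality degradation
}


def route_alert(severity):
    """Return the (channel, max_delay_minutes) pair for an alert severity."""
    try:
        return ALERT_ROUTES[severity.lower()]
    except KeyError:
        raise ValueError(f"unknown severity: {severity!r}") from None


channel, delay = route_alert("Important")
print(channel, delay)
```

Keeping the table in one place makes it straightforward to audit who gets paged for what, and to change it without touching the alerting code.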

Custom Testing Framework

Organizations with sophisticated monitoring report 90% reduction in data-related incidents and 60% faster resolution times.

To maximize the value of your monitored dbt data outputs, our guide on choosing the right business intelligence tools helps you select platforms that best complement your data build tool investment.

Complete Tool Comparison Guide

Understanding how dbt compares to other data transformation tools helps clarify when it’s the right choice for your organization. Here’s a comprehensive comparison of leading solutions:

| Feature | dbt | Informatica | Talend | Apache Airflow | Matillion |
|---|---|---|---|---|---|
| Primary Focus | SQL-based transformations | Enterprise ETL/ELT | Open-source ETL | Workflow orchestration | Cloud-native ELT |
| Learning Curve | Low (SQL only) | High | Medium-High | High | Medium |
| Cost | Free Core, Paid Cloud | Enterprise pricing | Open-source/Enterprise | Free | Usage-based pricing |
| Deployment | Cloud/On-premise | On-premise/Cloud | Both | Both | Cloud-only |
| Target Users | Analysts/Engineers | Data engineers | Technical users | Engineers/DevOps | Analysts/Engineers |
| Version Control | Native Git integration | Limited | Basic | Code-based | Limited |
| Testing Framework | Built-in SQL tests | Manual/Custom | Basic | Custom scripting | Basic |
| Documentation | Auto-generated | Manual | Manual | Manual | Basic |
| Data Lineage | Automatic | Manual setup | Limited | None | Basic |
| Incremental Loading | Native support | Yes | Yes | Custom code | Yes |
| Real-time Processing | No | Yes | Yes | Yes | Limited |
| GUI Interface | dbt Cloud only | Yes | Yes | Web UI | Yes |
| Code Reusability | High (macros/models) | Medium | Medium | High | Medium |
| Community Support | Large, active | Enterprise only | Medium | Large | Small |
| Warehouse Integration | Excellent | Good | Good | Custom | Excellent |

The Future of Enterprise Data Build Tool: What’s Coming for dbt Data

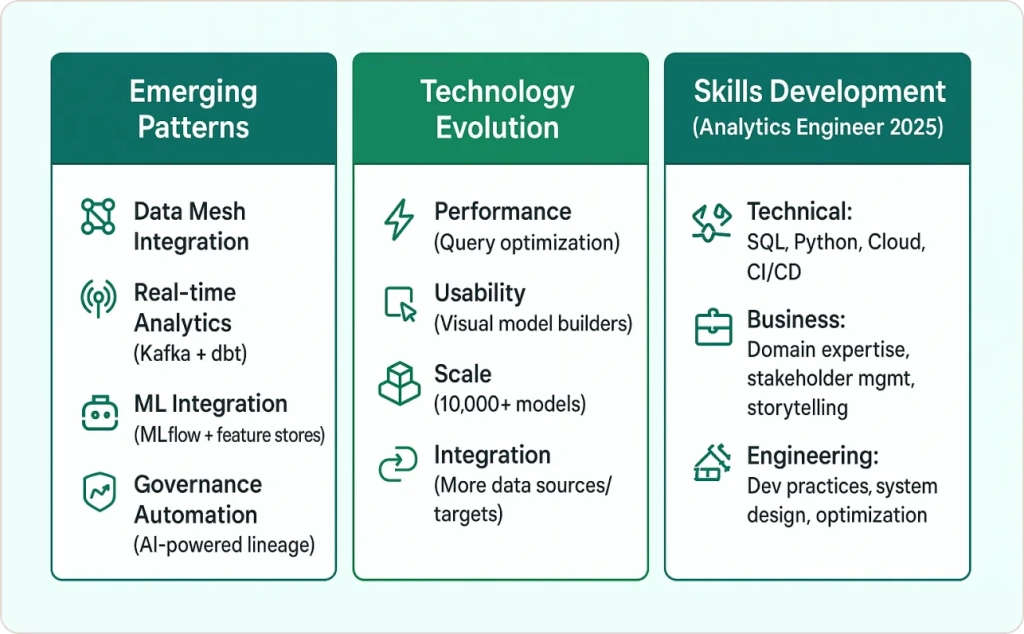

Emerging Patterns in dbt in Data Engineering

- Data Mesh Integration: data build tool as domain-specific data product builder

- Real-time Analytics: Integration with streaming platforms like Kafka for dbt data

- ML Integration: Tighter coupling with MLflow and feature stores using database transformation tool

- Governance Automation: AI-powered data lineage and impact analysis for dbt in data engineering

Technology Evolution

- Performance: Query optimization through better compilation

- Usability: Visual model builders for business analysts

- Scale: Improved dependency resolution for 10,000+ model projects

- Integration: Native support for more data sources and targets

Skills Development

The analytics engineer role is rapidly evolving. Key skills for 2025:

- Technical: Advanced SQL, Python, cloud platforms, CI/CD

- Business: Domain expertise, stakeholder management, data storytelling

- Engineering: Software development practices, system design, performance optimization

For organizations building these capabilities, understanding data visualization best practices becomes essential to effectively communicate insights from your data build tool implementations.

Conclusion: The Path Forward for Enterprise Data Build Tool Success

Enterprise data build tool implementation isn’t about installing a tool—it’s about fundamentally transforming how your organization approaches data transformation. The organizations that succeed treat dbt in data engineering as a multi-year strategic initiative requiring dedicated resources, cultural change, and realistic expectations.

The Reality Check for dbt Data:

- Plan for 8-12 months and $500K+ investment for meaningful enterprise database transformation tool implementation

- Success requires dedicated analytics engineering talent who understand the difference between dbt build and dbt run, not part-time analysts

- Governance and compliance frameworks are non-negotiable for regulated industries using data build tool

- Performance optimization and cost management need ongoing attention for dbt in data engineering

The Success Formula for Database Transformation Tool:

- Start with architecture: Design for your actual dbt data scale, not tutorial examples

- Invest in people: Analytics engineering skills for data build tool don’t develop overnight

- Build governance early: Prevent technical debt before it becomes expensive in dbt data projects

- Monitor everything: What gets measured gets optimized in database transformation tool implementations

- Iterate continuously: dbt in data engineering implementations are never “done”

The organizations achieving transformational results with the data build tool share one characteristic: they treat dbt data transformation as a strategic capability requiring long-term investment rather than a quick fix for bottlenecks.

Ready to build enterprise-grade database transformation tool capabilities that deliver measurable business impact through dbt in data engineering? Based on our experience with 50+ enterprise projects, we’ve developed a comprehensive implementation framework that addresses the real challenges organizations face with dbt data at scale.