Introduction

Large language models (LLMs) like GPT-4, Claude, and LLaMA 2 are increasingly making their way into the business analytics world. From report summarization to simplifying the way we query data, the capabilities of LLMs are transforming how we interact with information.

With their ability to process natural language and generate insights, they offer businesses a chance to democratize analytics, allowing non-technical users to tap into data without needing a deep understanding of complex tools.

The appeal is clear. Imagine being able to type a question in plain English and receive a meaningful data-driven answer. No more waiting for data teams to run custom queries or create complicated reports.

But while the potential of LLMs is exciting, it’s important to recognize that their integration into business workflows isn’t without risks. The ability to access and analyze data comes with significant responsibilities—particularly around issues like data privacy, compliance, and the accuracy of insights generated by these models.

In this blog, we’ll walk through the benefits of LLMs in analytics, highlight common risks, and provide you with a structured approach to integrating these tools securely and responsibly.

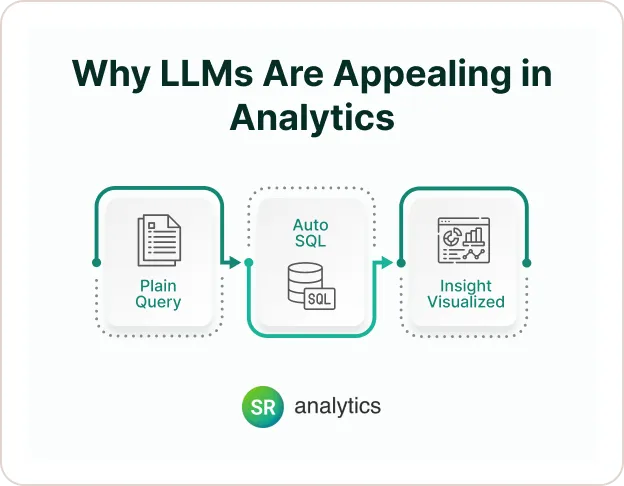

Why LLMs Are Appealing in Analytics

In today’s fast-paced business world, agility is key. LLMs promise to streamline workflows and make data more accessible. If you’re a business owner, analyst, or decision-maker, the use of LLMs could significantly impact the efficiency of your operations.

LLMs unlock several capabilities such as natural language to SQL, automated documentation, and summarization. These use cases align closely with trends in Business Intelligence and Analytics for 2025, where ease of data access and insights delivery are top priorities.

So, what makes LLMs so appealing in analytics?

LLMs are trained on a vast corpora of text and can understand and generate human-like responses. In the context of data analytics, this unlocks several capabilities:

1. Natural Language to SQL

One of the most exciting capabilities of LLMs is their ability to translate natural language queries into SQL. For instance, imagine a business user who doesn’t know SQL but needs to generate a report or analyze a dataset.

With an LLM, they can simply type their request in plain language—“Show me the sales trend for the last quarter”—and the model will automatically generate the corresponding SQL query.

This significantly reduces the need for specialized technical skills and makes data analysis more accessible to a broader audience.

It’s like having a virtual assistant that speaks SQL fluently, helping your team unlock the insights they need without relying on your data engineers or analysts to build every query.

This capability is transforming how companies approach data—enabling faster, more agile decision-making.

2. Automated Documentation

Documentation is one of those tasks that often gets overlooked but is crucial for maintaining consistency and transparency in data processes. LLMs can help by automatically generating documentation for data models, tables, and even dashboards.

This ensures that everyone, from analysts to business users, can understand the structure of your data and the logic behind the insights being presented.

Imagine you’re launching a new dataset or dashboard. Instead of spending hours documenting it manually, you can use LLMs to generate clean, professional descriptions of what each data model represents.

This allows your team to spend more time analyzing data and less time on administrative tasks. This, in turn, improves the overall efficiency of your organization and helps teams align quickly on the structure and meaning of data.

3. Summarization and Explanation

LLMs can also help explain complex data points in a way that’s easy to understand for non-technical stakeholders. For example, if a chart shows a dip in sales for a specific region, an LLM can generate a summary that explains what the chart shows, why the dip might be occurring, and potentially even suggest actionable next steps.

This capability is especially beneficial for teams that need to present insights to decision-makers who might not have the technical background to interpret raw data.

With LLMs, you can break down the data into digestible, business-friendly language. Imagine how much faster your team could act if they didn’t have to manually interpret charts or wait for a data expert to explain findings!

The applications of LLMs in business analytics are vast, but as with any powerful tool, they come with their own set of challenges. Let’s dive into the risks you should be aware of when integrating LLMs into your workflows.

These applications are especially useful in organizations where business users frequently interact with data and need self-service access to insights.

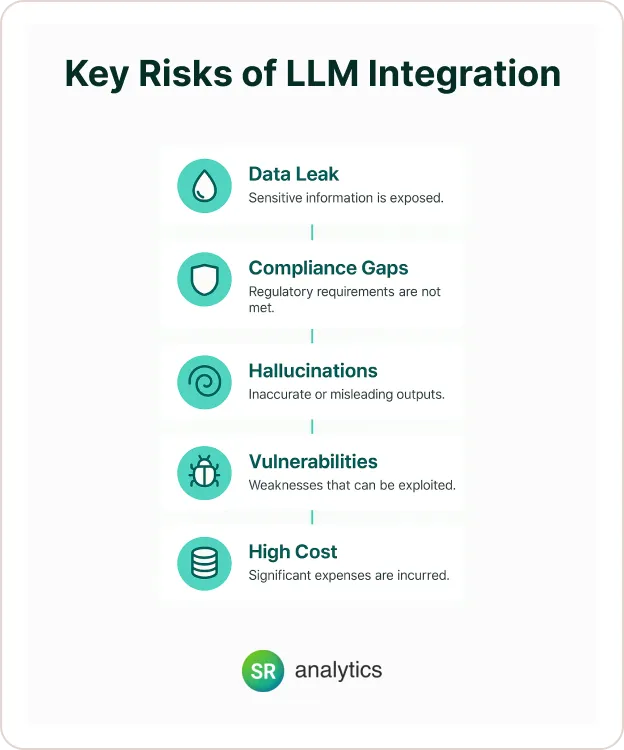

Key Risks of LLM Integration

While LLMs offer many benefits, there are also some inherent risks that come with their integration. These risks, if not properly managed, can have serious consequences for your business.

Let’s take a look at the top concerns you should keep in mind:

1. Data Privacy and Leakage

When using LLMs, one of the biggest concerns is data privacy. Many LLM services—particularly free or publicly accessible ones—store your input data for model training or improvement purposes.

This means your sensitive business data could be at risk if it’s shared with a third-party model. Even enterprise-level offerings may still have data retention practices that require careful scrutiny.

To ensure data privacy, it’s essential to carefully review the data handling and retention policies of the LLM provider you choose. If you’re working with sensitive data, consider using self-hosted LLMs or enterprise solutions that give you greater control over how data is processed and stored.

Always opt for providers that clearly outline how they handle data, and check for compliance with privacy regulations.

OpenAI’s documentation confirms that enterprise offerings provide more control over data retention, but caution is still necessary.

2. Regulatory Non-Compliance

If your organization handles personal or sensitive data, you need to be particularly cautious. Privacy regulations like GDPR, CCPA, or HIPAA impose strict guidelines on how data must be handled and processed.

If an LLM interacts with this kind of data without the proper safeguards in place, you could be putting your business at risk of fines or legal consequences.

The risks are amplified when using third-party LLM APIs, which may process and store data in jurisdictions that do not meet your regulatory requirements. It’s essential to ensure that any LLM you integrate into your analytics workflows complies with all relevant privacy laws.

Using cloud-based models that guarantee compliance with industry standards can provide a level of security, but always confirm the provider’s compliance practices.

3. Hallucinations and Inaccuracy

“Hallucinations” are a well-known problem in the world of LLMs. These occur when the model generates an output that sounds plausible but is factually incorrect.

For example, an LLM might generate a business insight that seems logical but is based on inaccurate or incomplete data.

In the context of analytics, hallucinations can be dangerous. Incorrect insights could lead decision-makers down the wrong path, potentially causing financial losses or strategic missteps.

That’s why it’s critical to implement quality controls and have humans review outputs before they are acted upon. Validation processes such as double-checking model outputs and cross-referencing insights with existing data can help minimize this risk.

4. Security Vulnerabilities

LLMs are susceptible to certain security vulnerabilities, such as prompt injection attacks. In these attacks, a malicious actor inputs carefully crafted prompts to manipulate the model’s behavior, potentially gaining unauthorized access to sensitive data or causing the model to generate harmful outputs.

To minimize the risk of such attacks, it’s essential to implement robust security practices, including input validation, content filtering, and regular model audits.

These steps will help protect your data and prevent malicious actors from exploiting the model. Using a layered approach to security—such as combining encryption with access controls—can ensure that your LLM integration remains secure.

The OWASP Foundation has identified prompt injection and unauthorized access as among the top vulnerabilities in LLM applications.

5. Cost and Resource Consumption

While the benefits of LLMs are significant, they are also resource-intensive. Running large models requires substantial computing power, and when scaling these models to handle large datasets or high query volumes, costs can add up quickly.

The cost of LLM deployments can be especially high when using GPU-based inference or paying for token usage. For businesses with limited budgets, these costs can become a barrier to widespread adoption.

It’s important to carefully assess the financial impact and scalability of your LLM deployment before moving forward. To mitigate costs, consider using a hybrid deployment model or optimizing model queries to reduce token consumption.

Data privacy, regulatory compliance, hallucinations, and security vulnerabilities are real concerns. For businesses in sensitive sectors like Healthcare or Financial Services, ensuring compliant LLM usage is critical.

Recommended Framework for Safe LLM Integration

Now that we’ve covered the risks, let’s talk about how you can safely integrate LLMs into your analytics workflow.

A structured, phased approach will help you balance the power of LLMs with the need for secure, compliant use. Here’s a framework to guide your implementation:

1. Define Use Cases with Risk Awareness

The first step is to choose appropriate use cases that won’t expose sensitive data to unnecessary risks. Start by identifying tasks that involve non-sensitive data, such as generating SQL queries, summarizing reports, or drafting documentation.

Avoid using LLMs for tasks that involve personally identifiable information (PII) or confidential business data in the initial stages.

By starting small, you can gain confidence in the model’s capabilities and understand how to manage its use in a controlled environment.

This will help you build a solid foundation for scaling up in the future.

Tip: “Start small: use LLMs for drafting documentation or summarizing reports. For broader analytics automation, consider these Data Cleaning Automation Hacks to streamline your data workflows first.”

2. Choose the Right Deployment Model

LLMs can be deployed in several ways, each with different implications for data security:

| Deployment Model | Data Privacy | Complexity | Cost |

|---|---|---|---|

| Public APIs (e.g., ChatGPT) | Low | Low | Low |

| Enterprise APIs (e.g., Azure OpenAI, OpenAI Enterprise) | Medium–High | Medium | Medium |

| Self-hosted open-source LLMs (e.g., LLaMA 2, Falcon) | High | High | High |

Organizations requiring maximum control should consider deploying open-source LLMs in private environments. Those seeking ease of use with acceptable risk may opt for enterprise-grade cloud services, which offer encryption and data handling guarantees.

3. Implement Architectural Safeguards

When integrating LLMs, never allow direct connections between your production databases and the model.

Instead, use methods like sandboxing, read-only pipelines, or Retrieval-Augmented Generation (RAG) to ensure that the model only has access to safe, pre-approved data.

For instance, with RAG, the model can query a vector database and generate insights based on approved context, rather than accessing your raw database.

This keeps your sensitive data protected while still enabling the model to provide valuable insights.

Tip: To implement such methods, frameworks like LangChain and LlamaIndex are commonly used.

4. Apply Prompt and Output Controls

Controlling how the model interacts with data is essential for ensuring safe outputs. Use prompt engineering to guide the model’s responses, ensuring they adhere to predefined rules.

Prompt engineering helps guide the model to behave within constraints. For example:

“You are a business assistant. Do not provide personally identifiable information.”

Additionally, apply content moderation tools to filter out potentially harmful or sensitive information.

Tip:

In addition to system-level prompts, organizations should implement content moderation and filtering. Tools like Guardrails AI validate output structure and adherence to rules.

Microsoft’s Presidio library can be used to detect and remove sensitive information from prompts or responses.

5. Educate Users and Set Guidelines

One of the most important steps is educating your team on the proper use of LLMs. This includes explaining which tools are approved for use, what types of data can be shared, and how to validate the outputs generated by the models.

Misuse of LLMs—especially when sharing sensitive data—can be a major risk.

Tip: “Internal misuse is one of the biggest threats. Make sure your team understands which tools are safe to use and how to handle data responsibly.”

6. Monitor, Audit, and Improve

After deployment, continuous monitoring is critical. Track model outputs, look for hallucinations, and ensure that the model is working as expected.

Tools such as Arize AI and WhyLabs offer observability features specific to LLMs, helping track performance and anomalies.

Tip: “Ongoing monitoring will help you spot issues before they become major problems. Use these insights to refine and improve your model’s performance.”

Final Considerations

LLMs are a powerful tool that can transform your analytics workflow, improving efficiency, democratizing access to insights, and accelerating decision-making.

But integrating these models comes with risks that must be carefully managed.

By following a structured framework—defining use cases, choosing the right deployment model, applying safeguards, educating your team, and continuously monitoring the system—you can unlock the power of LLMs while mitigating potential risks.

For businesses looking to implement these strategies effectively, working with experienced data analytics consulting services can provide the technical guidance and governance frameworks needed to ensure a secure, scalable LLM integration.