Highlights

- Microsoft Fabric unifies 7 different data workloads in one integrated platform

- Companies that make data-driven decisions are 23 times more likely to acquire customers, according to McKinsey research

- Fabric includes everything from data engineering to real-time analytics natively

- Cost considerations differ dramatically from traditional Power BI licensing

- Migration complexity varies significantly based on current data architecture

Introduction

The data analytics landscape just shifted dramatically, and if you’re still thinking about Microsoft Fabric as “just another Power BI update,” you’re missing the bigger picture entirely.

When Microsoft announced Fabric at Build 2023, they weren’t just releasing another tool—they unveiled their vision for the future of data analytics. But here’s what caught my attention: while everyone was getting excited about the shiny new features, I started seeing the same pattern I’ve witnessed with countless analytics implementations over the past decade.

Organizations jumping in without understanding what they’re actually getting into.

What makes Microsoft Fabric different from Power BI?

Microsoft Fabric includes Power BI as one of seven integrated workloads, alongside data engineering, data warehousing, real-time analytics, and machine learning, all unified under OneLake. It adds self-service tools, built-in governance, and a capacity-based pricing model in which you buy shared compute instead of licensing every user individually (report authors still need a Power BI Pro license).

Here’s the reality:

Microsoft Fabric isn’t just a new Microsoft data platform—it’s a fundamental reimagining of how data flows through your organization. And while that presents incredible opportunities, it also introduces complexities that can derail your entire data strategy if you’re not prepared.

The stakes are higher than ever. Companies using unified analytics platforms report better collaboration between data teams, but those same organizations also face higher implementation costs when they don’t plan properly from the start.

In this guide, I’ll walk you through the five essential things every organization must understand before adopting Microsoft Fabric. Whether you’re coming from Power BI, considering your first enterprise analytics platform, or evaluating a complete data ecosystem overhaul, these insights will help you make the right decision for your business.

Why Microsoft Fabric Matters More Than You Think

Let me start with something that might surprise you: Microsoft Fabric isn’t really about replacing what you’re currently using. It’s about fundamentally changing how your organization thinks about data.

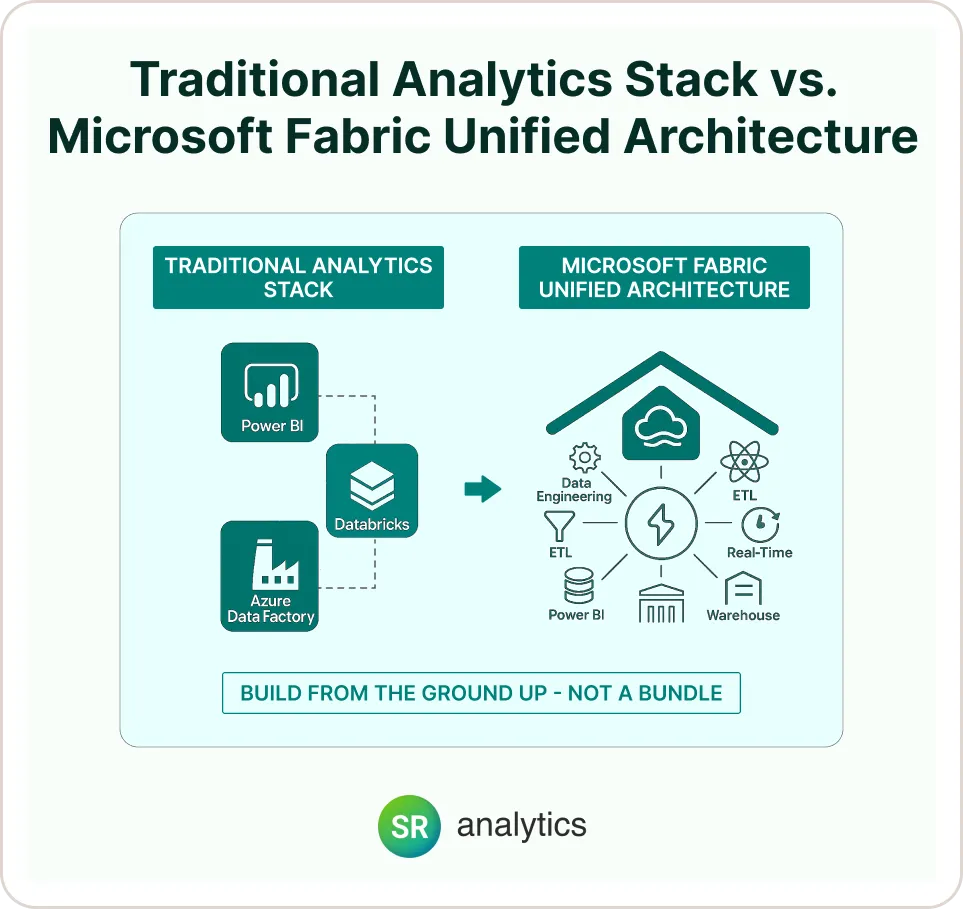

Traditional analytics setups force you to cobble together different tools for different jobs. You’ve got Power BI for visualizations, maybe Azure Data Factory for ETL, perhaps Databricks for data science work, and likely several other point solutions filling gaps. Each tool requires its own expertise, licensing, and maintenance.

Microsoft Fabric changes this equation by bringing seven core data workloads under one roof:

- Data Engineering (Spark notebooks and lakehouses, comparable to Databricks or Synapse Spark)

- Data Factory (ETL/ELT processes)

- Data Science (ML model development and deployment)

- Real-Time Analytics (streaming data and event processing; now branded Real-Time Intelligence)

- Data Warehouse (enterprise-scale data storage)

- Databases (operational data management)

- Power BI (business intelligence and reporting)

But here’s where it gets interesting: unlike typical “suite” products that just bundle existing tools together, Fabric is built on a unified data foundation called OneLake. Every workload reads and writes the same copy of your data, stored in an open format (Delta Parquet), so you avoid the data movement penalties you’d face with separate systems.

The early signs are promising. Organizations that consolidate onto a unified analytics platform typically report less operational overhead, faster access to real-time insights, and less time spent moving and reprocessing data. But, and this is crucial, these benefits only materialize when the implementation is done right.

Microsoft Fabric Adoption Trends and Implementation Insights (2025)

In 2025, Microsoft Fabric adoption is accelerating as enterprises look for unified data platforms, with the strongest interest coming from teams already invested in Azure. Successful implementations hinge on three things: readiness for new skill sets, a realistic view of migration complexity, and active cost management under capacity-based pricing. Many organizations bring in an implementation partner to streamline deployment, control costs, and get to real-time analytics at scale sooner.

The 5 Critical Things You Must Know Before Adopting Microsoft Fabric

1. Fabric vs Power BI: Understanding What You’re Actually Getting

This is where most organizations get confused, and I don’t blame them. Microsoft’s messaging around Fabric vs Power BI can be misleading if you’re not paying close attention.

Here’s the straightforward truth: Power BI is now a workload within Microsoft Fabric, not a separate product. Think of it this way—if Power BI was your analytics car, Fabric is the entire transportation ecosystem including roads, traffic management, fuel stations, and maintenance centers.

What this means practically:

Power BI continues to exist as you know it, but within Fabric, it gains access to enterprise-scale compute, unified data governance, and seamless integration with other data workloads. Your existing Power BI reports and dashboards will continue working, but they can now tap into data processed by Fabric’s data engineering pipelines or machine learning models without any additional data movement.

The licensing reality check:

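This is crucial for budgeting. Fabric is licensed by capacity rather than purely per user: you buy a pool of compute (an F SKU, sized in capacity units) either pay-as-you-go or reserved, and OneLake storage is billed separately. Per-user licenses don’t disappear entirely, though. Report authors still need Power BI Pro, and on capacities smaller than F64, report viewers need it too. For organizations with heavy data processing needs or large viewer populations, this model can dramatically reduce costs. But for smaller teams primarily doing basic reporting, it might increase expenses.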
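
To make that trade-off concrete, here is a minimal break-even sketch. It assumes a flat monthly capacity price and a flat per-user price, and it ignores author licenses and storage. Both prices are placeholders I made up for illustration, not Microsoft list prices, so substitute your own quotes.

```python
import math


def breakeven_viewers(capacity_cost_per_month: float,
                      per_user_cost_per_month: float) -> int:
    """Smallest viewer count at which per-user licensing costs
    at least as much as a fixed monthly capacity."""
    if capacity_cost_per_month < 0 or per_user_cost_per_month <= 0:
        raise ValueError("capacity cost must be >= 0 and per-user cost > 0")
    return math.ceil(capacity_cost_per_month / per_user_cost_per_month)


# Hypothetical prices for illustration only.
HYPOTHETICAL_CAPACITY_COST = 5000.0  # per month
HYPOTHETICAL_PER_USER_COST = 10.0    # per user per month

print(breakeven_viewers(HYPOTHETICAL_CAPACITY_COST,
                        HYPOTHETICAL_PER_USER_COST))  # 500
```

With these made-up numbers, a team with fewer than 500 report viewers would pay less under per-user licensing, which is why small reporting-only teams often see costs rise.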

Real-world implementation examples show significant value: Melbourne Airport reported that adopting Microsoft Fabric empowered their people with the right tools to make smarter, data-driven decisions daily, while Iceland Foods noted that “Fabric massively reduces data movement and preparation, and data is easily shared across different platforms.”

When Power BI alone might still be the right choice:

- Your team primarily creates reports and dashboards

- Data sources are relatively simple (Excel, SQL databases, cloud services)

- No immediate need for advanced data engineering or machine learning

- Budget constraints require predictable per-user licensing

When Fabric makes strategic sense:

- Multiple teams need different types of data workloads

- Complex data transformations and engineering pipelines

- Real-time analytics requirements

- Data science and machine learning initiatives planned

- Need for enterprise-scale data governance

2. The Real Microsoft Fabric Benefits (Beyond the Marketing Hype)

Let me cut through the marketing speak and tell you about the actual benefits of Microsoft Fabric that matter for real businesses.

Unified Data Governance That Actually Works

The biggest practical benefit I’ve seen is how Fabric handles data governance. In traditional setups, you’re managing security, lineage, and quality across multiple tools with different interfaces and standards. Fabric’s unified approach means one security model, one set of permissions, and one place to track how data flows through your organization.

Organizations implementing unified data governance report significant time savings in coordination efforts across different platforms and tools.

True Self-Service Analytics

Here’s something most people miss: Fabric enables genuine self-service analytics for business users without requiring them to become data engineers. The unified semantic layer means business analysts can access the same cleaned, governed data that data scientists use for machine learning—without needing to understand complex data pipelines.

Elimination of Data Silos

This sounds like marketing fluff, but it’s genuinely transformative. When all your data workloads share the same underlying data lake (OneLake), you eliminate the typical ETL processes between different analytics tools. Data engineers can prepare data once, and it’s immediately available to Power BI reports, machine learning models, and real-time analytics without additional movement or transformation.

Integrated Machine Learning Workflows

If you’ve ever tried to get machine learning models from data science teams into business applications, you know the pain. Fabric’s integrated ML capabilities mean models can be developed, trained, and deployed within the same platform where your business intelligence operates, dramatically reducing time-to-value.

Cost Optimization Through Unified Computing

The capacity-based pricing model allows for better resource utilization. Instead of dedicating separate compute to each tool, all workloads draw from one shared capacity, which you can resize as demand changes and, on pay-as-you-go, pause when it isn’t needed. Some estimates put total cost of ownership reductions for unified data management platforms at 30-80% compared to on-premises deployments, but treat figures like these as directional until you’ve modeled your own workloads.

3. Migration Complexity: What Your Current Setup Means for Fabric Adoption

Your path to Microsoft Fabric depends heavily on where you’re starting from, and the complexity varies dramatically based on your current data architecture.

Starting from Power BI Pro/Premium:

This is typically the smoothest migration path. Your existing reports, datasets, and dashboards can be migrated to Fabric with minimal disruption. Power BI continues to exist within Fabric as one of its seven workloads. The primary considerations are:

- Reconfiguring data refresh schedules to take advantage of Fabric’s enhanced capabilities

- Training teams on new collaboration features

- Gradually introducing advanced workloads as needed

Timeline: 2-4 months for basic migration, 6-12 months for full optimization

Starting from Azure Analytics Ecosystem:

If you’re already using Azure Data Factory, Azure Synapse, or Azure Machine Learning, Fabric migration involves consolidating these services. This can be complex but offers the highest potential value.

Key challenges:

- Restructuring existing data pipelines to work with OneLake

- Migrating custom code and configurations

- Retraining teams on unified interfaces

Timeline: 6-18 months depending on current complexity

Starting from Non-Microsoft Platforms:

Organizations using Tableau, Qlik, or other non-Microsoft analytics tools face the most complex migration path. This isn’t just a technology migration—it’s often a complete rethinking of data workflows.

Critical considerations:

- Data connector availability for your specific sources

- Rebuilding existing reports and dashboards

- Change management for teams accustomed to different interfaces

- Potential need for parallel systems during transition

Timeline: 12-24 months for complete migration

The Hidden Migration Costs

Beyond licensing, budget for:

- Training and change management (typically 20-30% of total project cost)

- Potential consultant or implementation partner fees

- Temporary parallel system costs during migration

- Data migration and validation efforts

Based on our experience with similar migrations, plan for 40-60% more time and budget than initial estimates to account for unexpected complexity and change management needs.
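
The rules of thumb above can be turned into a quick budget sketch. The function below is my own illustration, not a standard formula: it pads a base estimate with a contingency (defaulting to 50%, the midpoint of the 40-60% range) and then carves out a training share (defaulting to 25%, the midpoint of 20-30%). The 200,000 base figure is hypothetical.

```python
def migration_budget(base_estimate: float,
                     contingency: float = 0.50,
                     training_share: float = 0.25) -> dict:
    """Pad a base estimate with contingency, then split out the
    training and change management portion of the padded total."""
    if base_estimate < 0:
        raise ValueError("base_estimate must be non-negative")
    total = base_estimate * (1 + contingency)
    training = total * training_share
    return {
        "total": total,
        "training_and_change_mgmt": training,
        "everything_else": total - training,
    }


# Hypothetical base estimate for illustration only.
budget = migration_budget(200_000)
for line_item, amount in budget.items():
    print(f"{line_item}: {amount:,.0f}")
```

Running the numbers at both ends of each range (40% and 60% contingency, 20% and 30% training) gives a budget band to take into planning conversations, which is usually more honest than a single figure.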

4. Skills and Team Readiness: The Make-or-Break Factor

Here’s something nobody talks about enough: Microsoft Fabric’s success in your organization depends more on your team’s readiness than the technology itself.

The New Skill Requirements

Fabric introduces a different way of thinking about data work that requires new skills even for experienced analytics professionals:

For Business Analysts:

- Understanding OneLake structure and data organization

- Working with unified semantic models across different workloads

- Collaborating effectively with data engineers and data scientists

- Leveraging Copilot and AI-assisted analytics features

For Data Engineers:

- Fabric-specific pipeline development using Data Factory

- Optimizing for OneLake storage patterns

- Understanding compute scaling and cost optimization

- Integration patterns between different Fabric workloads

For IT and Data Governance Teams:

- Unified security model management

- Capacity planning and cost monitoring

- Data lineage tracking across integrated workloads

- Backup and disaster recovery in the Fabric environment

The Training Investment Reality

Plan for significant training investment. Microsoft provides extensive documentation and learning paths, but practical expertise takes time to develop. Research shows that 50% of employees will need significant reskilling by 2025 as industries shift toward digital-first business models. Based on implementations I’ve guided, expect:

- Initial productivity drop: 20-30% decrease in team productivity for 3-6 months during the learning curve

- Training time: 40-80 hours per team member for proficiency

- Expert development: 12-18 months to develop true Fabric expertise
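
To see what those ranges mean in money, here is a rough sketch of the learning-curve cost for one team. The team size, hourly cost, and 160 working hours per month are hypothetical inputs, and the sketch assumes training time and the productivity dip are separate costs (in practice they may overlap).

```python
def learning_curve_cost(team_size: int,
                        hourly_cost: float,
                        training_hours: float,
                        productivity_drop: float,
                        months: float,
                        working_hours_per_month: float = 160.0) -> dict:
    """Estimate direct training cost plus the cost of reduced output
    while the team ramps up."""
    training = team_size * training_hours * hourly_cost
    lost_output = (team_size * working_hours_per_month * months
                   * productivity_drop * hourly_cost)
    return {
        "training": training,
        "lost_output": lost_output,
        "total": training + lost_output,
    }


# Hypothetical team: 8 people at 75 per hour, 60 training hours each,
# a 25% productivity dip lasting 4 months.
estimate = learning_curve_cost(team_size=8, hourly_cost=75.0,
                               training_hours=60, productivity_drop=0.25,
                               months=4)
for item, amount in estimate.items():
    print(f"{item}: {amount:,.0f}")
```

In this made-up scenario the productivity dip costs far more than the training itself, which is the part most budgets leave out.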

Building Internal Capabilities vs. External Support

Many organizations underestimate the value of external expertise during Fabric adoption. While Microsoft’s documentation is comprehensive, real-world implementation involves countless decisions that significantly impact long-term success.

Consider a hybrid approach: external consultants for initial implementation and architecture decisions, paired with aggressive internal skill development to maintain long-term capabilities.

5. Cost Modeling and ROI Calculations That Actually Matter

The biggest mistake I see organizations make is focusing solely on licensing costs without understanding the total economic impact of Microsoft Fabric adoption.

Understanding Fabric’s Consumption-Based Pricing

Unlike traditional per-user licensing, Fabric bills for capacity, measured in capacity units (CUs). You either reserve a fixed capacity or pay as you go for the CUs you provision. This creates both opportunities and risks:

Opportunities:

- Lower costs for organizations with variable usage patterns

- No per-user charge for additional report viewers on F64 and larger capacities (smaller capacities still require Power BI Pro for viewers)

- Ability to scale compute resources precisely to workload demands

Risks:

- Unpredictable monthly costs if usage isn’t properly managed

- Potential for runaway costs with poorly optimized data pipelines

- Complexity in budget planning and cost allocation
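
A small sketch shows why usage patterns matter so much. It assumes a pay-as-you-go capacity billed per CU-hour that can be paused when idle. The rate below is a placeholder I chose for illustration, not a published price, and storage is left out.

```python
def monthly_capacity_cost(capacity_units: int,
                          rate_per_cu_hour: float,
                          active_hours: float) -> float:
    """Compute cost for a capacity that is billed only while running."""
    if capacity_units <= 0 or rate_per_cu_hour < 0 or active_hours < 0:
        raise ValueError("inputs must be positive")
    return capacity_units * rate_per_cu_hour * active_hours


HYPOTHETICAL_RATE = 0.20      # per CU-hour, illustration only
CAPACITY_UNITS = 64

always_on = monthly_capacity_cost(CAPACITY_UNITS, HYPOTHETICAL_RATE, 730)
# Same capacity, running 12 hours a day on 22 working days.
business_hours = monthly_capacity_cost(CAPACITY_UNITS, HYPOTHETICAL_RATE,
                                       12 * 22)

print(f"always on:      {always_on:,.2f}")
print(f"business hours: {business_hours:,.2f}")
print(f"savings:        {1 - business_hours / always_on:.0%}")
```

The same arithmetic works in reverse: a pipeline that forces you up a capacity size doubles the first argument, and the bill with it.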

Real ROI Calculation Framework

To properly evaluate Fabric ROI, consider these often-overlooked factors:

Cost Savings:

- Eliminated licensing and service costs for replaced tools (Azure Data Factory, separate BI tools, etc.)

- Reduced data integration and ETL development costs

- Decreased infrastructure management overhead

- Lower long-term training costs once teams work on a single platform (after the up-front training investment)

Productivity Gains:

- Faster data pipeline development (typically 30-50% reduction in development time)

- Reduced time-to-insight for business users

- Elimination of data movement between different analytics tools

- Improved collaboration between data teams

Business Value Creation:

- Enhanced decision-making through integrated analytics

- New analytics capabilities previously unavailable

- Improved data governance and compliance

- Scalability for future data growth

Sample ROI Considerations:

Organizations evaluating Microsoft Fabric should consider these typical cost and benefit factors:

Annual Cost Factors:

- Current setup licensing (Power BI Premium + Azure services + maintenance)

- Fabric projected consumption costs (based on usage modeling)

- One-time implementation costs (training, migration, consulting)

Annual Benefit Categories:

- Productivity savings (reduced development and maintenance time)

- New capability value (real-time analytics enabling operational improvements)

- Infrastructure savings (reduced management overhead)

- Total cost of ownership reduction (unified data management platforms can significantly lower TCO compared to traditional deployments)

ROI Timeline: Most organizations see positive returns within 12-24 months, with full value realization in 2-3 years
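
If you want to sanity-check a payback claim against your own numbers, a simple cumulative cash-flow loop is enough. This is a sketch under simplifying assumptions: a constant monthly net benefit (benefits minus any increase in run costs) and no discounting. The figures in the example are hypothetical.

```python
from typing import Optional


def payback_month(one_time_cost: int,
                  monthly_net_benefit: int,
                  horizon_months: int = 60) -> Optional[int]:
    """Return the first month in which cumulative net benefit covers
    the one-time cost, or None if that never happens in the horizon."""
    if monthly_net_benefit <= 0:
        return None
    cumulative = -one_time_cost
    for month in range(1, horizon_months + 1):
        cumulative += monthly_net_benefit
        if cumulative >= 0:
            return month
    return None


# Hypothetical: 300,000 one-time cost, 20,000 net benefit per month.
print(payback_month(300_000, 20_000))  # 15
```

A result of `None` is a useful signal in itself: at that benefit level the project does not pay back within the horizon you care about.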

Critical Cost Management Strategies

- Implement monitoring from day one: Fabric’s consumption model requires active monitoring to prevent cost overruns

- Optimize data pipelines: Poorly designed pipelines can dramatically increase compute costs

- Right-size capacity: Start conservative and scale based on actual usage patterns

- Establish governance: Clear policies for who can create compute-intensive workloads
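
Monitoring from day one does not need to be sophisticated. The sketch below projects month-end spend from the daily figures seen so far and flags a likely overrun. The daily numbers are made up, and how you obtain them is up to you (for example, an export from whatever capacity or billing report you use). This is not a call to any Fabric API.

```python
from typing import Sequence


def projected_month_end(daily_spend: Sequence[float],
                        days_in_month: int) -> float:
    """Project month-end spend from the average of the days so far."""
    if not daily_spend:
        raise ValueError("need at least one day of spend data")
    average = sum(daily_spend) / len(daily_spend)
    return average * days_in_month


def is_over_budget(daily_spend: Sequence[float],
                   days_in_month: int,
                   monthly_budget: float) -> bool:
    """True if the current run rate would exceed the monthly budget."""
    return projected_month_end(daily_spend, days_in_month) > monthly_budget


# Hypothetical first four days of a 30-day month.
spend_so_far = [300, 320, 410, 390]
print(projected_month_end(spend_so_far, 30))     # 10650.0
print(is_over_budget(spend_so_far, 30, 10_000))  # True
```

A straight-line projection is crude, but catching a drift in week one is far cheaper than explaining it at month end.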

Common Implementation Pitfalls and How to Avoid Them

After guiding numerous Fabric implementations, I’ve seen the same mistakes repeated across different organizations. Here are the most critical ones to avoid:

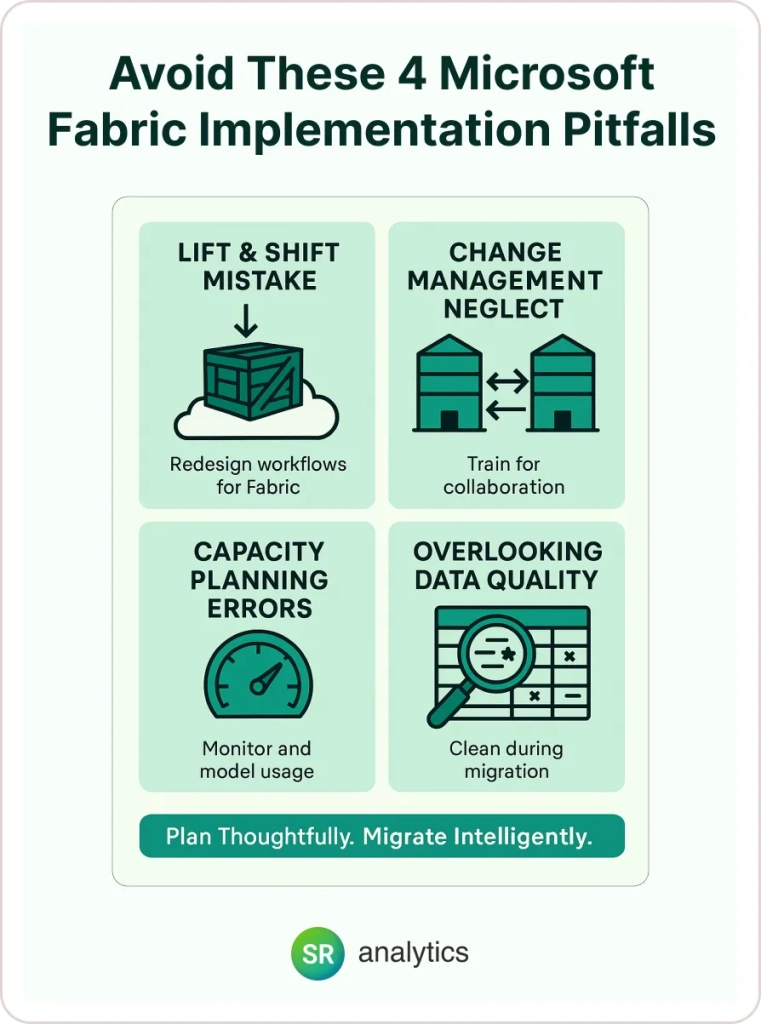

Pitfall 1: Treating Fabric as a “Lift and Shift” Migration

Many organizations attempt to simply recreate their existing analytics setup within Fabric without taking advantage of its unified architecture. This approach eliminates most of the platform’s benefits while introducing unnecessary complexity.

Solution: Redesign your data workflows to leverage OneLake and cross-workload integration from the beginning.

Pitfall 2: Underestimating Change Management

Technical migration is often straightforward compared to the organizational change required. Teams accustomed to working in silos struggle with Fabric’s collaborative approach.

Solution: Invest heavily in change management and cross-team collaboration training, not just technical training.

Pitfall 3: Inadequate Capacity Planning

Fabric’s consumption-based model can lead to cost surprises if usage patterns aren’t properly understood and planned for.

Solution: Conduct thorough usage analysis before migration and implement robust monitoring and governance from day one.

Pitfall 4: Ignoring Data Quality During Migration

The migration process often exposes existing data quality issues that were previously hidden by complex ETL processes.

Solution: Treat migration as an opportunity to improve data quality, not just move existing problems to a new platform.

Is Microsoft Fabric Right for Your Organization?

Based on my experience implementing Fabric across different industries and organization sizes, here’s my honest assessment of when Fabric makes sense and when it doesn’t.

Microsoft Fabric is likely a good fit if:

- You currently use multiple Microsoft data and analytics tools

- Your organization has or plans to have dedicated data engineering resources

- You need real-time analytics capabilities

- Data governance and collaboration between data teams is a priority

- You’re planning to expand analytics capabilities beyond basic reporting

- Your data volumes and complexity are growing significantly

Stick with your current setup if:

- Your analytics needs are primarily basic reporting and dashboards

- You have a small team (fewer than 5 people) working with data

- Budget constraints require predictable, fixed costs

- Your organization isn’t ready for significant process changes

- You’re heavily invested in non-Microsoft analytics tools that meet your needs

Consider a phased approach if:

- You want to test Fabric capabilities without full commitment

- Your team needs time to develop new skills

- Budget approval for full implementation will take time

- You need to prove value before larger organizational commitment

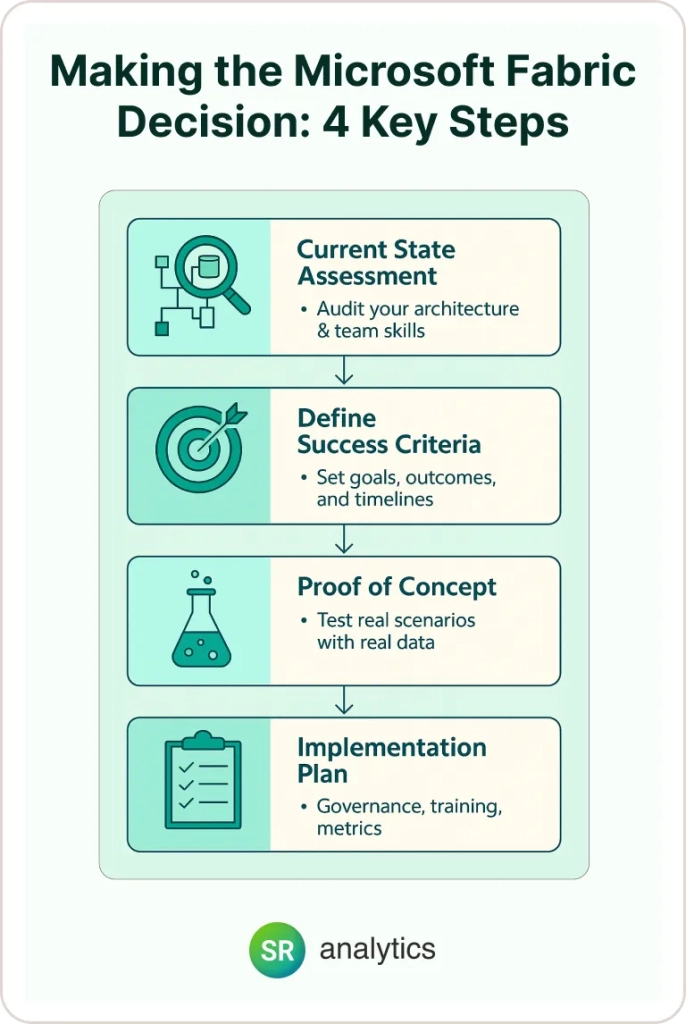

Making the Decision: Your Next Steps

If you’ve made it this far, you’re clearly serious about evaluating Microsoft Fabric for your organization. Here’s how to move forward systematically:

Step 1: Conduct an Honest Current State Assessment

- Document your existing data architecture and analytics workflows

- Identify pain points and limitations in your current setup

- Catalog skills and capabilities within your data team

- Calculate current total cost of ownership for all data and analytics tools

Step 2: Define Success Criteria

- Establish clear business objectives for analytics platform changes

- Define measurable outcomes you expect from Fabric adoption

- Set realistic timelines for implementation and value realization

- Identify key stakeholders and their requirements

Step 3: Run a Proof of Concept

- Start with a limited scope pilot project

- Test Fabric capabilities with real data and use cases

- Evaluate team adaptation and learning curve

- Measure actual performance and cost implications

Step 4: Develop a Comprehensive Implementation Plan

- Create detailed migration timeline and resource requirements

- Plan for training and change management

- Establish governance and monitoring frameworks

- Define success metrics and review checkpoints

The new Microsoft data platform represents a significant evolution in how organizations can approach analytics, but success depends on thoughtful planning and realistic expectations. The unified approach offers genuine advantages for organizations ready to embrace integrated data workflows, but it’s not a silver bullet for every analytics challenge.

Your decision should be based on your specific requirements, team capabilities, and strategic objectives—not on the latest technology trends or marketing promises. As McKinsey research shows, companies that make data-driven decisions are 23 times more likely to acquire customers and six times more likely to retain them, but success depends on choosing the right platform for your organization’s needs.